
Poster:

Citation:

Spyra, J., Swierczek, K., & Woolhouse, M. H. Intervening chromaticism and harmonic memory in the nonadjacent key paradigm. Poster at Society for Music Perception & Cognition. Portland, OR, 4-7 August 2022.

Abstract:

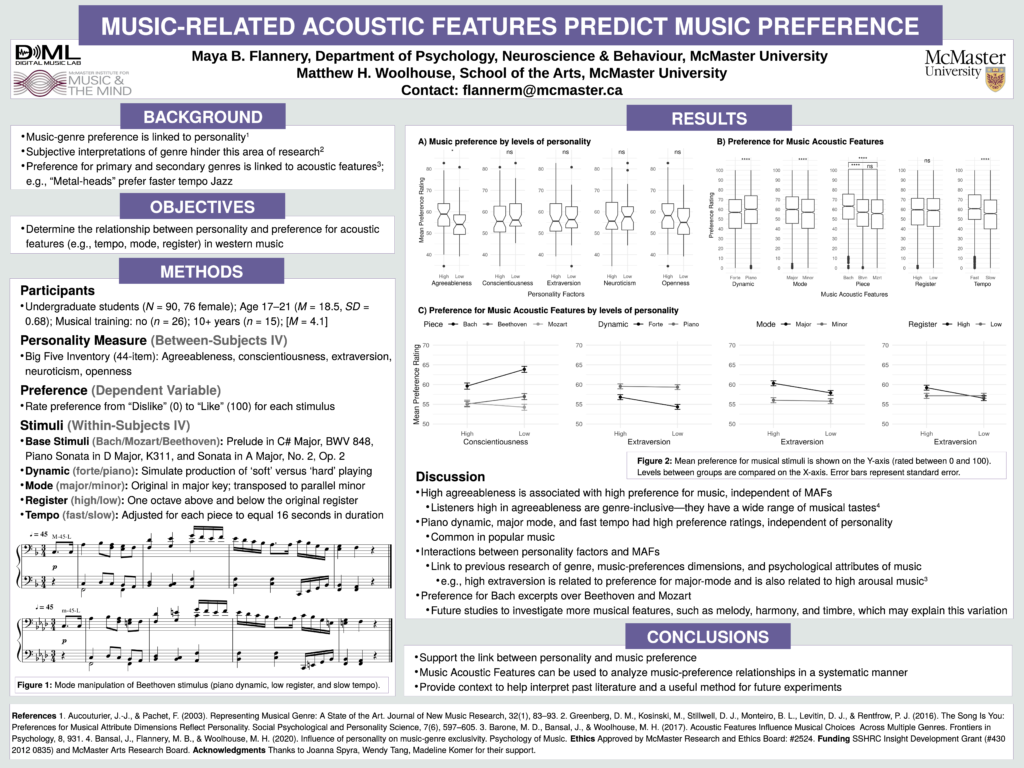

Memory for temporally nonadjacent musical keys decays by 20 seconds after modulation (Farbood, 2016; Woolhouse et al., 2016). However, many musical characteristics, from timbre to harmony itself, influence harmonic memory. In fact, memory for a musical key is significantly disrupted by an intervening excerpt in another, highly tonal key (Farbood, 2016), as opposed to a randomized sequence of chords or, most simply, a single repeated chord. However, studies such as Spyra et al. (2021) and Woolhouse et al. (2016) have used this paradigm with functional diatonic intervening sections (i.e., excerpts that have an unambiguous tonal center); both studies produced robust results, contrary to the predictions made by studies such as Farbood (2016). The current study addresses this conflicting evidence. A nonadjacent key paradigm was used in which: (1) a nonadjacent section established a musical key, (2) an intervening section modulated to a different key, and (3) a probe cadence was played after a small pause and was rated for goodness-of-completion. The keys could be congruent, in which sections 1 and 3 were in the same key, or incongruent, in which all three sections were composed in different keys. Incongruent stimuli should always result in low ratings of completion as there is no harmonic continuity between sections. The intervening section was manipulated in two ways: it could be composed in a diatonic or chromatic scale, and this scale was either functional (i.e., it followed traditional Western harmonic rules) or nonfunctional, in which each chord was pseudo-randomly transposed. Ratings were higher for functional diatonic stimuli than for nonfunctional diatonic, functional chromatic, or nonfunctional chromatic, contrary to previous research that has shown no significant traces of memory in such conditions. The converging evidence from these studies suggests that harmonic memory is not perturbed by tonality and may, in fact, be bolstered by it.

Farbood, M. (2016). Memory of a tonal center after modulation. Music Perception, 34(1), 71-93. https://doi.org/10.1525/mp.2016.34.1.71

Spyra, J., Stodolak, M., & Woolhouse, M. (2021). Events versus time in the perception of nonadjacent key relationships. Musicae Scientiae, 25(2), 212-225. https://doi.org/10.1177/1029864919867463

Woolhouse, M., Cross, I., & Horton, T. (2016). Perception of nonadjacent tonic-key relationships. Psychology of Music, 44(4), 802-815. https://doi.org/10.1177/0305735615593409

Poster:

Citation:

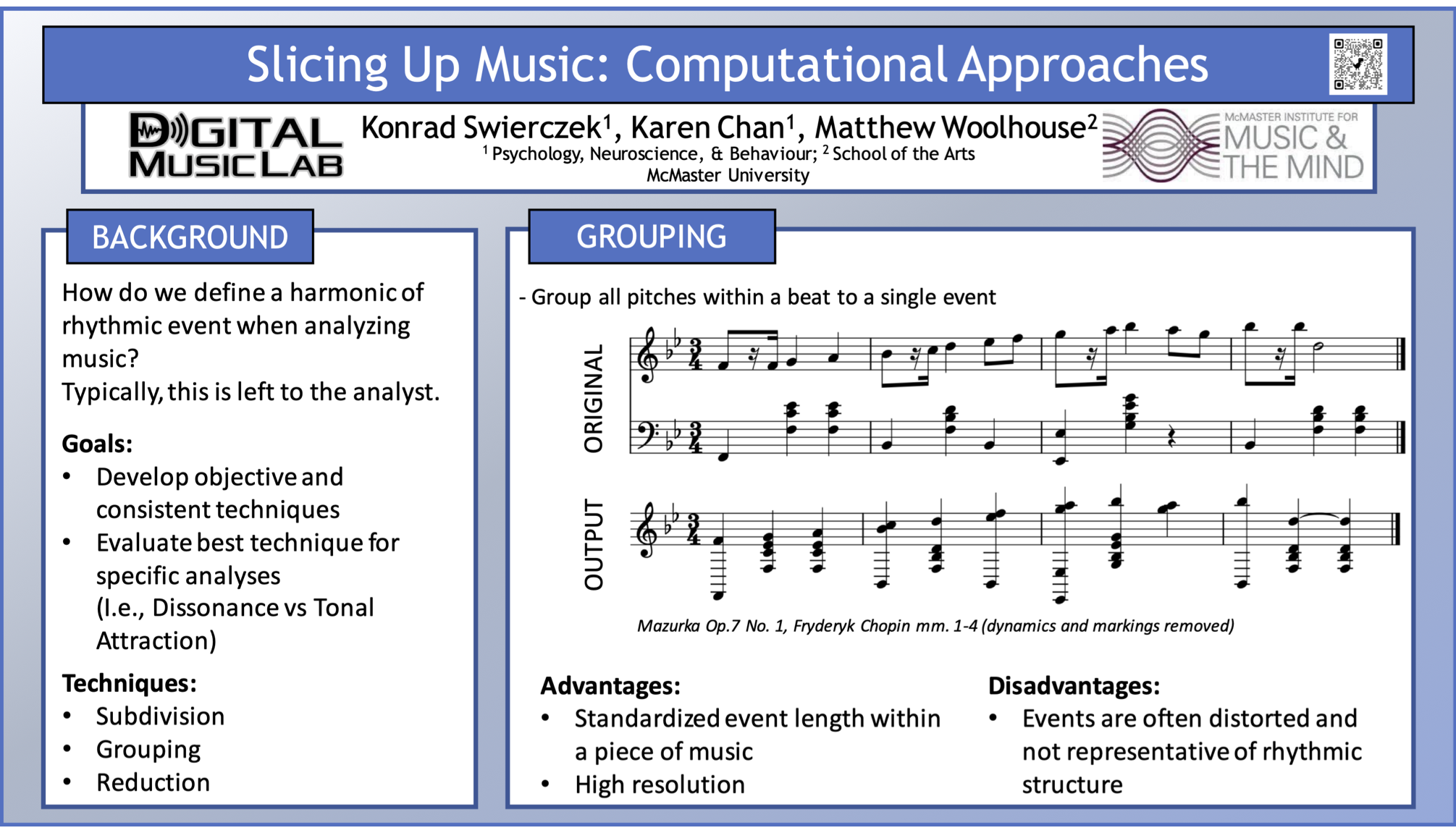

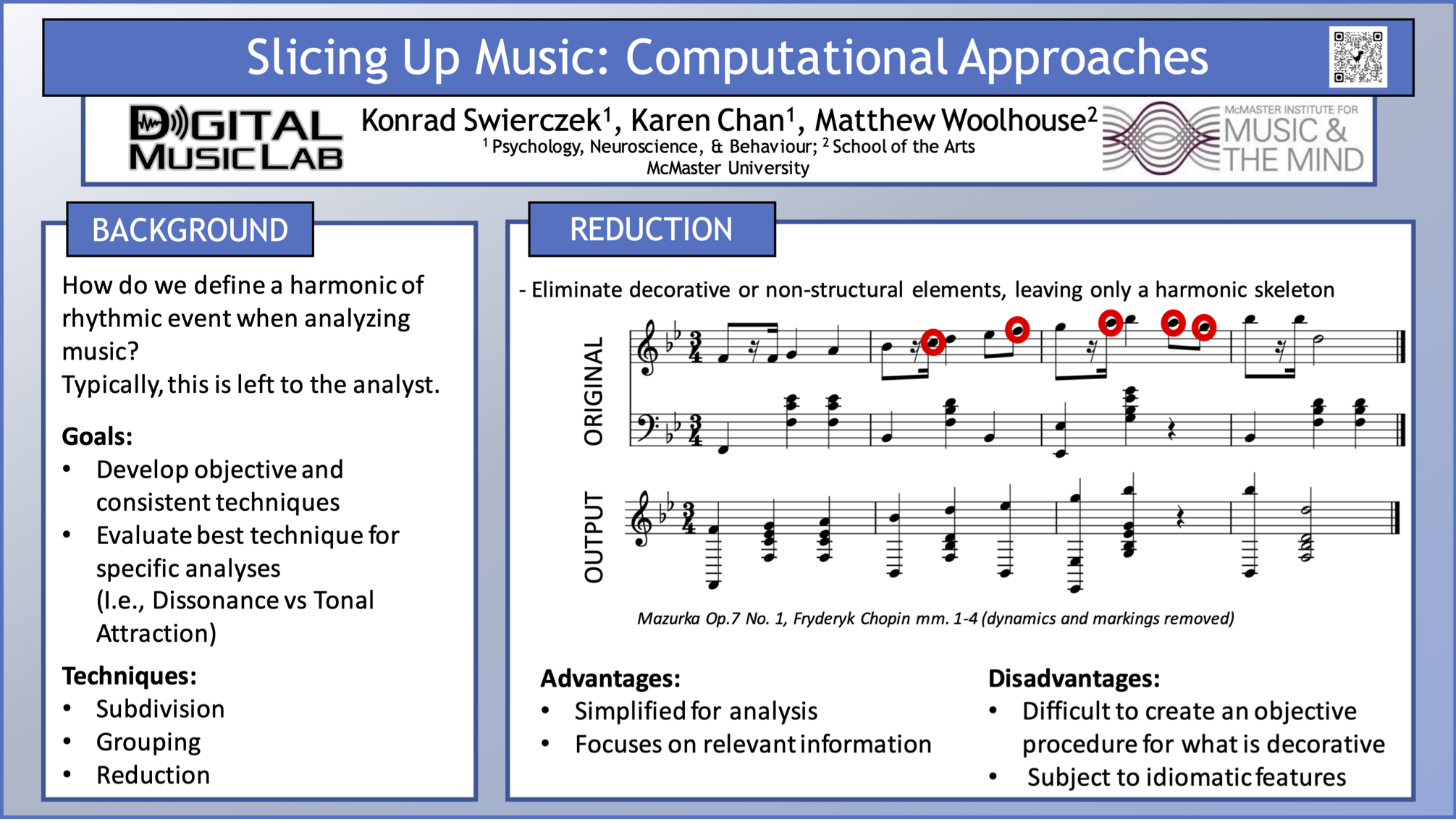

Swierczek, K., Chan, K. & Woolhouse, M. H. Poster at 17th Annual NeuroMusic Conference. McMaster University, Canada, 20 November 2021.

Abstract:

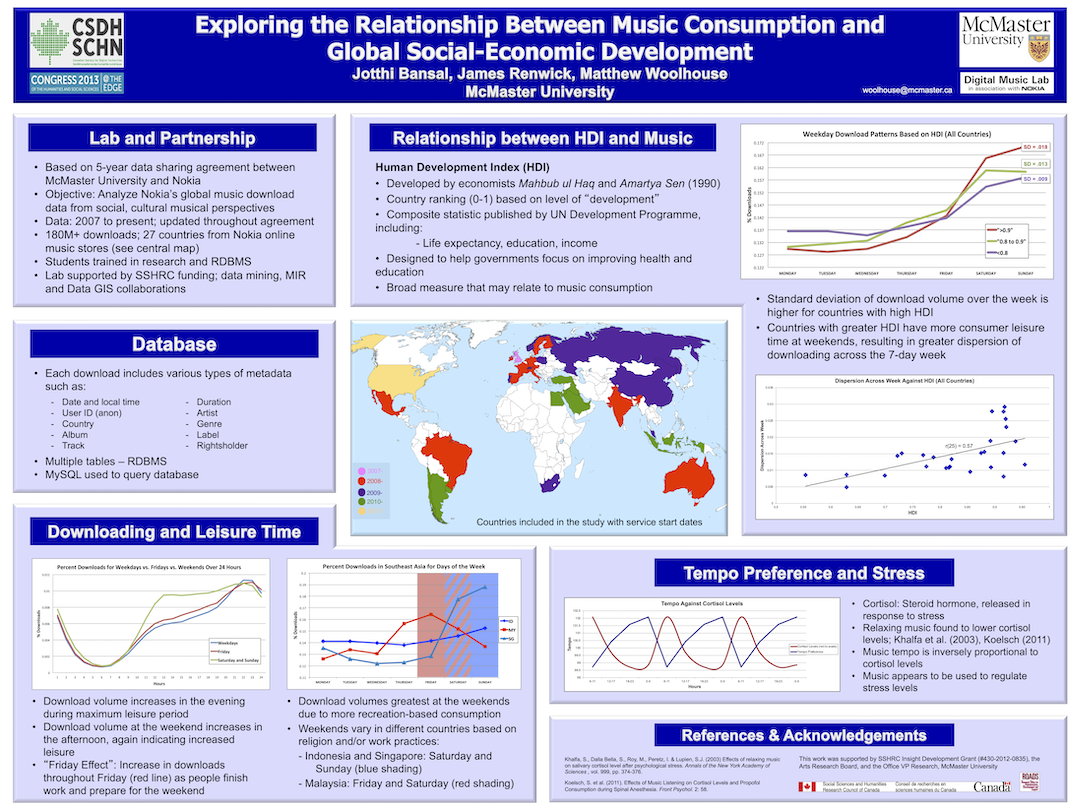

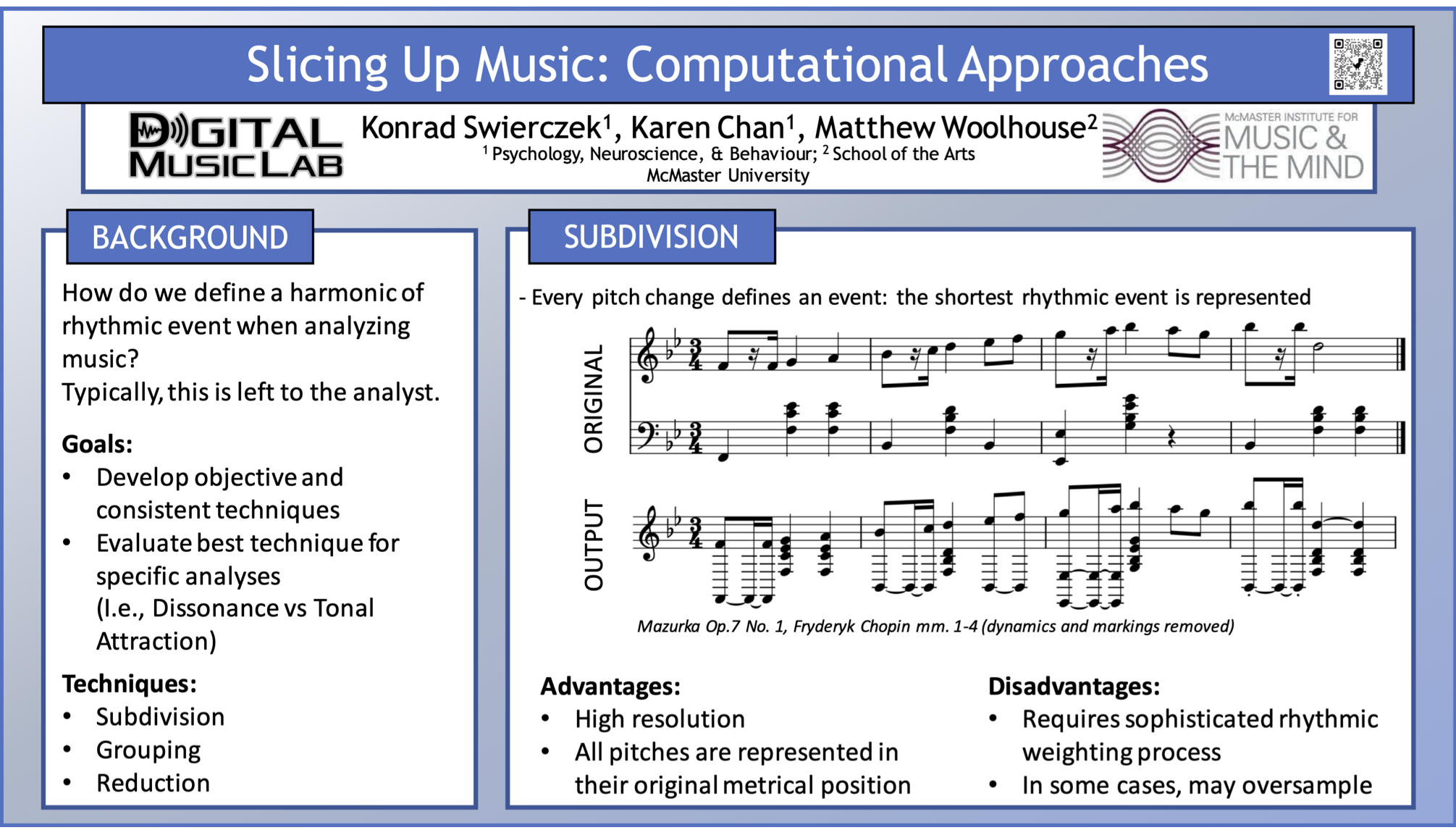

Traditional music theory-based analysis often involves a high degree of subjective judgments and variability between researchers: individual analysts differ in how they define individual harmonic or rhythmic events. While this does not present issues for individual analyses, these results may not be reflective of how music is perceived in any population beyond the researcher who performed the analysis. With the increasing availability of complex computational models for music analysis rooted in the disciplines of music cognition and empirical musicology, consistent and objective methods of defining events in symbolically notated music (in this case, Western staff notation) are required. More specifically, computational methods with a high degree of generalizability and automation are necessary to facilitate corpus analysis with minimal analyst/researcher intervention. Three such methods are explored: subdivision to the shortest rhythmic event, grouping to a harmonic rhythm or tactus, and reduction of non-structural elements (i.e., figuration). While each of these methods has advantages and disadvantages, integrating all three approaches may yield the most representative output for defining harmonic events in Western symbolic notation. The output of these computational methods is presently being applied to perform corpus analysis using psychological models of dissonance, voice leading, tonal attraction, and key finding.
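As an illustration only, the first of the three methods (subdivision to the shortest rhythmic event) might be sketched as follows. The note representation, the function name `slice_to_shortest_event`, and the assumption that every onset and duration is a whole multiple of the shortest duration are hypothetical choices made for this sketch, not details taken from the poster.

```python
from fractions import Fraction


def slice_to_shortest_event(notes):
    """Cut a passage into equal slices as long as its shortest note.

    `notes` is a list of (onset, duration, midi_pitch) tuples in beats.
    Assumes (hypothetically) that all onsets and durations are whole
    multiples of the shortest duration and that the passage starts at 0.
    Returns a list of (slice_onset, sorted_pitches) pairs.
    """
    notes = [(Fraction(on), Fraction(dur), pitch) for on, dur, pitch in notes]
    step = min(dur for _, dur, _ in notes)        # shortest rhythmic event
    end = max(on + dur for on, dur, _ in notes)   # end of the passage
    slices = []
    t = Fraction(0)
    while t < end:
        # A pitch belongs to the slice if it is sounding at the slice onset.
        sounding = sorted(p for on, dur, p in notes if on <= t < on + dur)
        slices.append((t, sounding))
        t += step
    return slices


# One held C4 under two shorter notes (E4, then G4), each half a beat long.
example = [
    (0, 1, 60),
    (0, Fraction(1, 2), 64),
    (Fraction(1, 2), Fraction(1, 2), 67),
]
result = slice_to_shortest_event(example)
```

Each slice is then a candidate harmonic event; grouping to a tactus or removing figuration would operate on top of a representation like this one.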

Poster:

Citation:

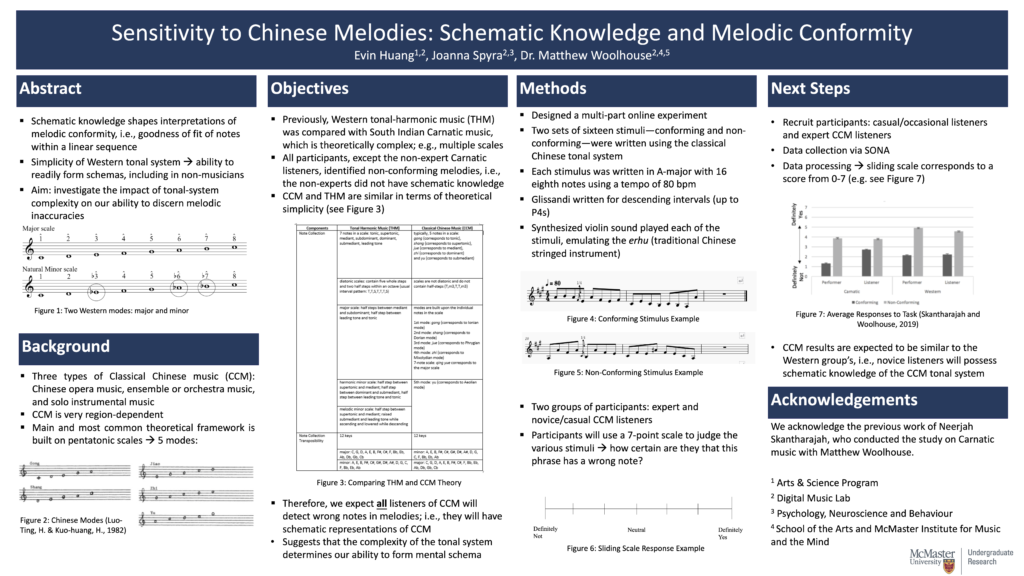

Huang, E., Spyra, J. & Woolhouse, M. H. Sensitivity to Chinese melodies: Schematic knowledge and melodic conformity. Poster at 17th Annual NeuroMusic Conference. McMaster University, Canada, 20 November 2021.

Abstract:

Our schematic knowledge helps to shape our interpretations of melodic conformity, i.e., the goodness of the fit of notes within a linear sequence. Previous studies have shown that people unfamiliar with a particular modal system were able to acquire short-term schematic knowledge to anticipate upcoming events. Arguably, this can be explained by the relatively constrained nature of Western tonality: the Western tonal-harmonic system, which is essentially built upon two modes, allows non-musicians to schematically learn it through enculturation. This experiment investigates the impact of tonal-system complexity on our ability to discern inaccuracies in melodies, i.e., wrong notes, and thus our schematic knowledge. Previously, we compared Carnatic music (which is comparatively theoretically complex) and Western tonal music using experienced and non-experienced listeners. Two sets of novel stimuli for each tonal system (either conforming or non-conforming) were presented. Results showed that all groups had the ability to identify non-conforming melodies, except for the inexperienced Carnatic listeners, suggesting that they failed to possess schematic knowledge. Using the same design, we will examine whether experienced and non-experienced listeners of classical Chinese music (also a relatively constrained system) are able to discern inaccuracies using schematic knowledge. Given the above, we expect that both experienced and inexperienced listeners of classical Chinese music will be sensitive to melodic non-conformity, which, in turn, will suggest that the simplicity/complexity of tonal systems determines listeners’ ability to form schematic representations of their structural properties. Results consistent with this hypothesis will inform future stimulus creation and music composition, and shape our understanding of musical knowledge and enculturation within cross-cultural contexts.

Poster:

Citation:

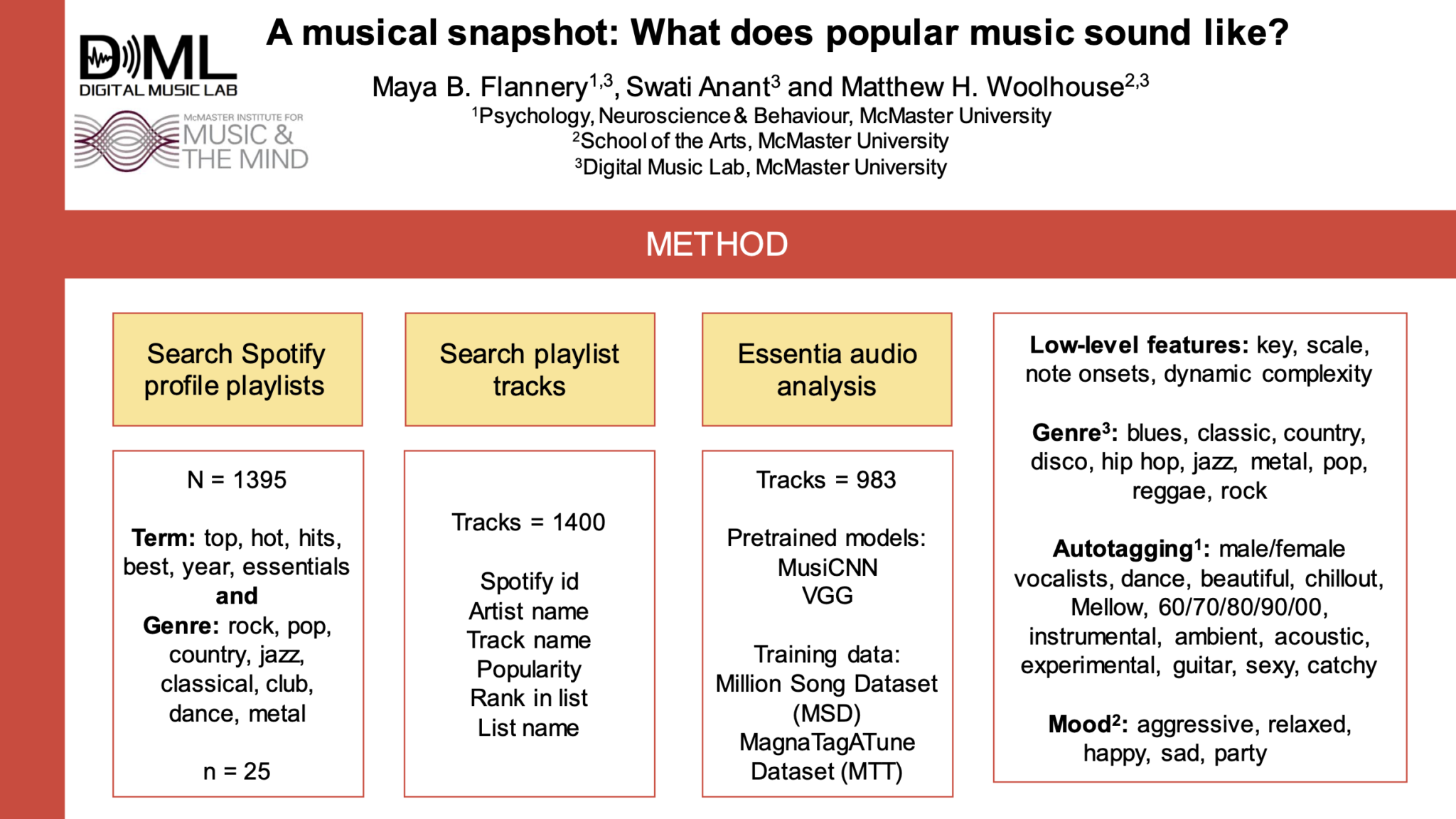

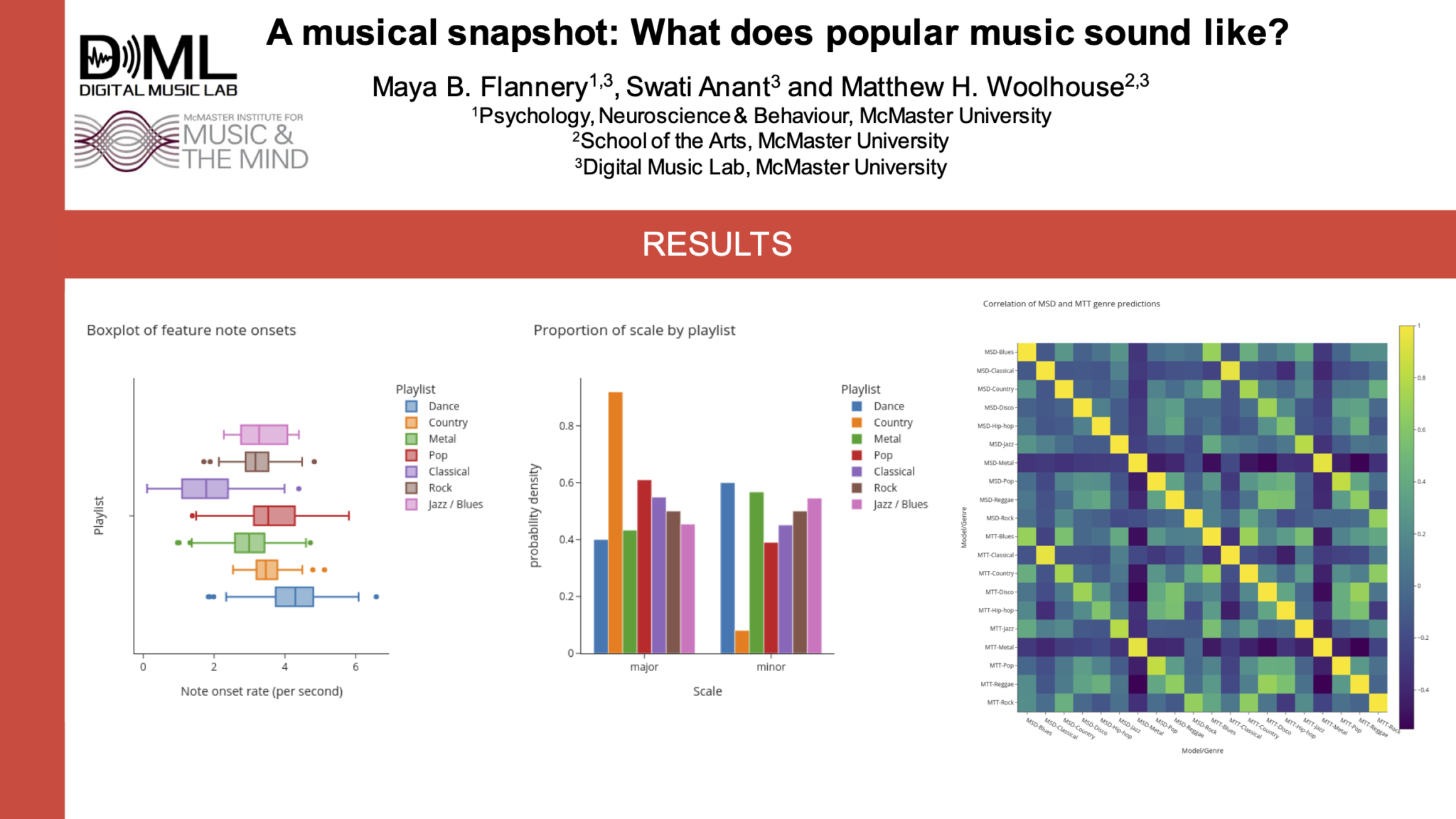

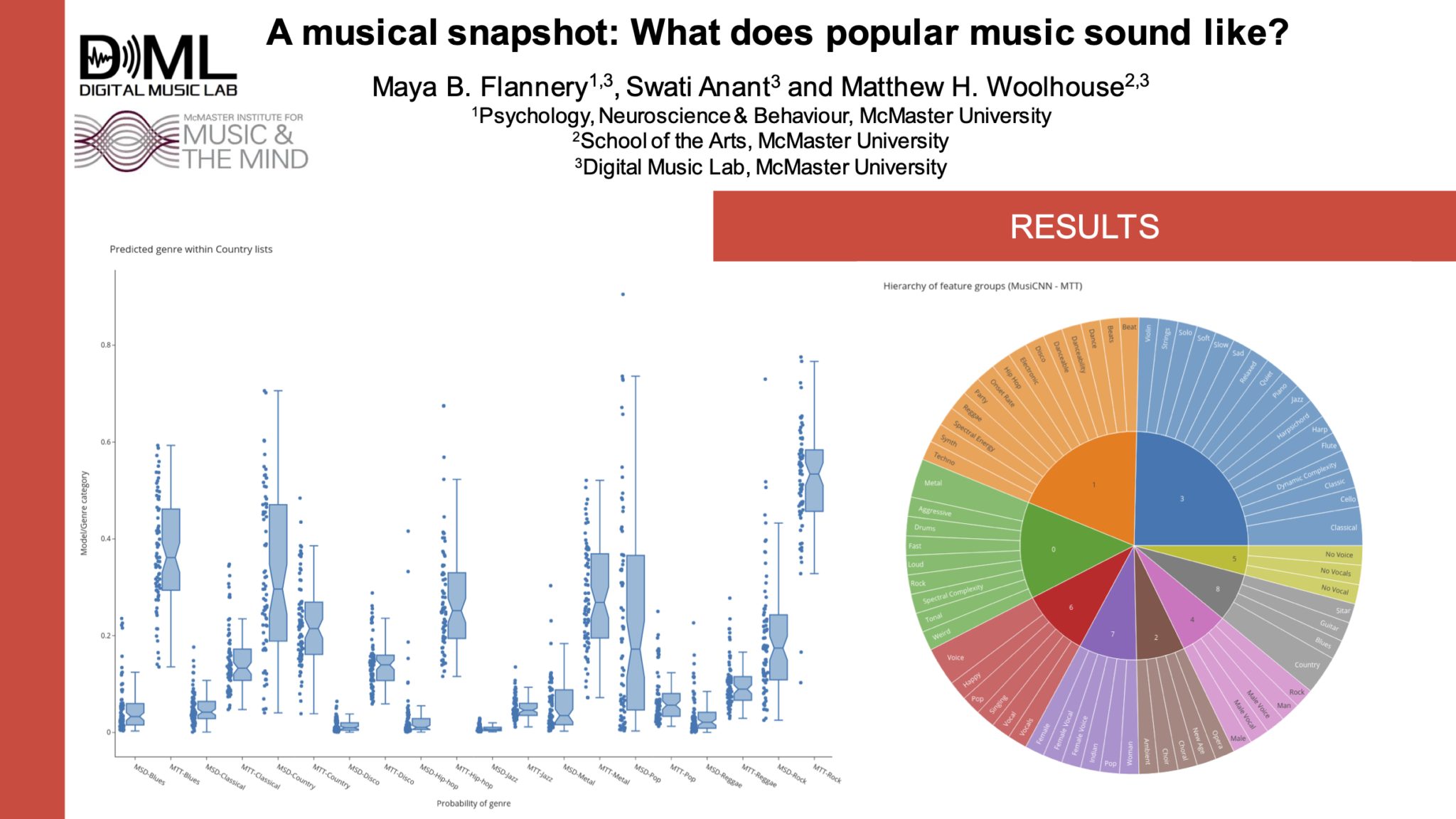

Flannery, M., Anant, S. & Woolhouse, M. H. A musical snapshot: What does popular music sound like? Poster at 17th Annual NeuroMusic Conference. McMaster University, Canada, 20 November 2021.

Abstract:

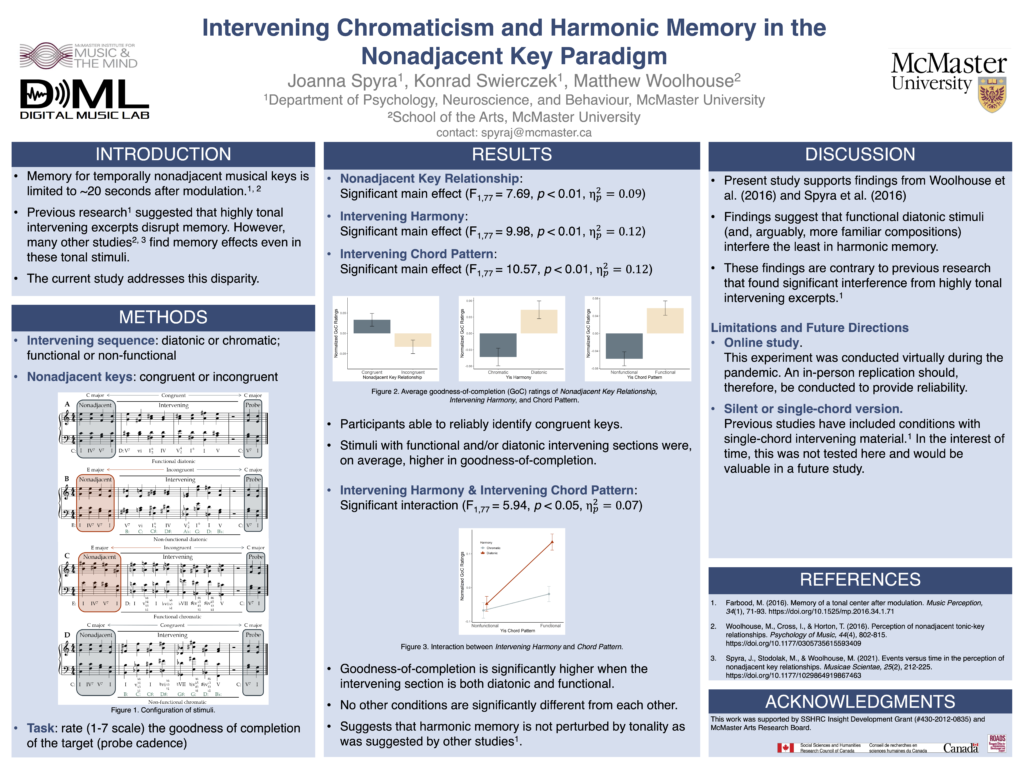

The music preferences of individuals have been linked to personality. Differences between individuals are often determined by an established measure of personality, such as the Big Five Inventory (BFI), while differences in music have been less consistently characterized. For example, research has found that preference is linked to music categorized by genres (e.g., Pop and Country; Zweigenhaft, 2008), music-preference dimensions (e.g., reflective and complex; Rentfrow & Gosling, 2003), and musical attributes (e.g., intense and mellow; Greenberg et al., 2016). While these studies provide insight into the musical choices of individuals, differences in music categorization methods have produced inconsistent and thus difficult to interpret results. The present study aims to classify music by music-related acoustic features, such as tempo and register, that are readily perceived. Such features are objectively quantifiable, easily defined, and can be manipulated within short musical excerpts. We hypothesize that the link between music preference and personality operates at the level of these types of acoustic features and to a lesser extent at broader categorization levels. Three short piano excerpts (from: Bach, C# Major Prelude; Mozart, Sonata No.9; and Beethoven, Sonata No.1) were systematically manipulated by dynamic, mode, register, and tempo, which resulted in 48 unique stimuli (3x2x2x2x2). Participants (N = 90) completed the 44-item BFI then listened to each musical stimulus and rated their preference on a scale from 0 (dislike) to 100 (like). Mixed-factorial ANOVAs, with personality traits as between-subject factors and music acoustic features as within-subject factors, were analyzed for their effects related to music-preference. The personality factor of Agreeableness and the music acoustic features of Dynamic, Mode, Piece, and Tempo were significantly related to preference ratings. 
Furthermore, the personality factors of Conscientiousness, Extraversion, Neuroticism, and Openness interacted with music acoustic features. For example, individuals high in Extraversion rated forte-dynamic stimuli disproportionately higher than individuals low in Extraversion. Our results support the link between personality, music acoustic features, and music preference. Moreover, our results provide additional context with which to interpret previous literature; we discuss the potential relationships between genre, music-dimensions and musical attributes with specific features of music.

Greenberg, D. M., Kosinski, M., Stillwell, D. J., Monteiro, B. L., Levitin, D. J., & Rentfrow, P. J. (2016). The song is you: Preferences for musical attribute dimensions reflect personality. Social Psychological and Personality Science, 7(6), 597–605. https://doi.org/10.1177/1948550616641473

Rentfrow, P. J., & Gosling, S. D. (2003). The do re mi’s of everyday life: The structure and personality correlates of music preferences. Journal of Personality and Social Psychology, 84(6), 1236–1256. https://doi.org/10.1037/0022-3514.84.6.1236

Zweigenhaft, R. L. (2008). A do re mi encore: A closer look at the personality correlates of music preferences. Journal of Individual Differences, 29(1), 45–55. https://doi.org/10.1027/1614-0001.29.1.45

Poster:

Citations:

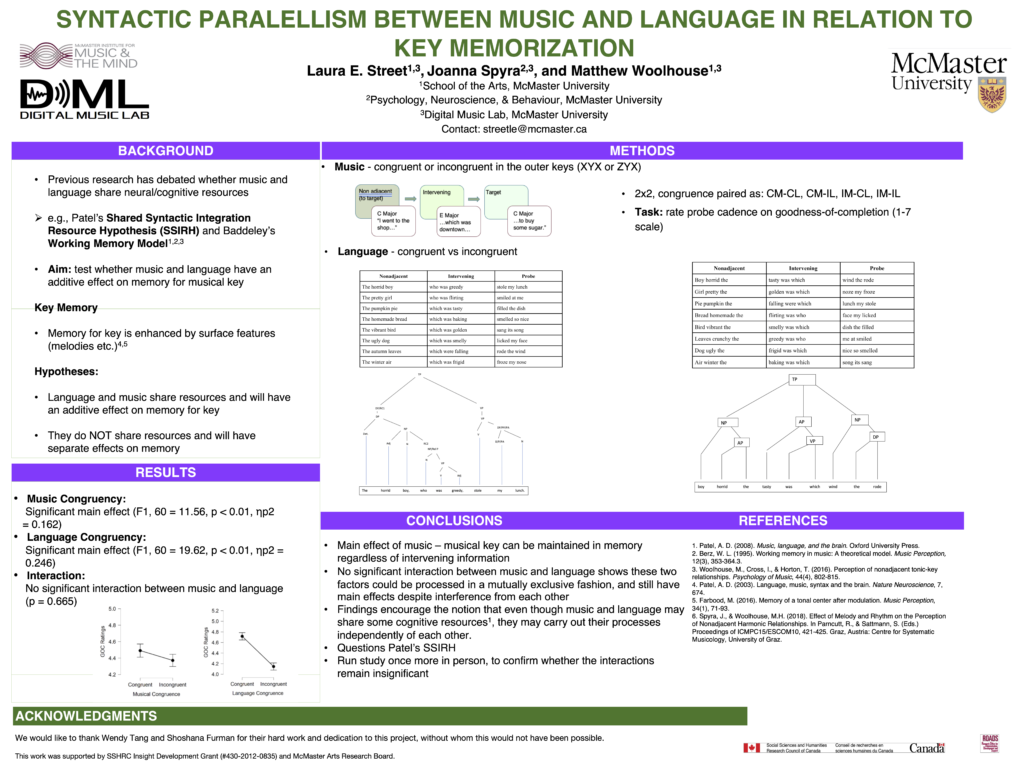

Street, L. E., Spyra, J., & Woolhouse, M. H. Syntactic parallelism between music and language in relation to key memorization. Poster at ICMPC16/ESCOM16 (online). University of Sheffield, United Kingdom, 28 July-3 August 2021.

Street, L., Spyra, J. & Woolhouse, M. H. Syntactic parallelism between music and language in relation to key memorization. Poster at 16th Annual NeuroMusic Conference, McMaster University, Canada, 14 November 2020.

Abstract:

Music and language occur as complex and ‘meaningful’ auditory patterns in human communication (Patel, 2008). In recent cognitive science, the syntactic parallelisms existing between these two domains have been compared and examined. For example, Slevc et al. (2009) examined language processing by manipulating the syntactic demands in language and music. Participants read “garden-path” sentences in which each segment was accompanied by a musical chord, which together created a coherent chord progression. Garden-path effects were most enhanced when syntactically unexpected words occurred with harmonically unexpected chords, while no garden-path effect was elicited when unexpected words or critical chords violated timbral expectancy alone (Slevc et al., 2009). These results help support the prediction of the shared syntactic integration resource hypothesis (Patel, 2003), suggesting that music and language rely on a common store of limited processing resources that form incoming elements into syntactic structures. Previous research, however, did not examine language and music in relation to memory. The current study develops and expands on this aspect by determining whether the perceived grammatical similarities between music and language are mutually exclusive in the memory domain. The experiment was designed such that congruency in language and in music could be separately juxtaposed. Congruent and incongruent sentences (semantically and syntactically correct or incorrect, respectively) were written with embedded clauses. Mimicking this, music stimuli were composed of three musical sections: a nonadjacent key-establishing sequence, an intervening section, and a probe cadence. Congruent musical phrases featured matching outer sections in ABA (key) formatting, while incongruent conditions moved through three different keys in CBA formatting.
In a 2×2 design, musical and linguistic congruency was paired as follows: (1) congruent music with congruent language; (2) congruent music with incongruent language; (3) incongruent music with congruent language; and (4) incongruent music with incongruent language. Each of these sequences finished with a probe cadence and was rated for goodness-of-completion by participants. There was a significant main effect of music congruency (F(1, 60) = 11.56, p < 0.01, ηp² = 0.162) where congruent music was rated higher on average than incongruent music. There was also a significant main effect of language congruency (F(1, 60) = 19.62, p < 0.01, ηp² = 0.246) where congruence was rated higher than incongruence. However, there was no significant interaction between them (p = 0.665). The main effect of music strongly suggests that participants are able to maintain a musical key in memory, despite intervening information. As the sung voice was arguably the most salient factor in the musical stimuli, it is hardly surprising that congruency had such a significant effect on completion ratings. Nonadjacent key relationships reached significance, despite the saliency of the linguistic factor. This suggests that these two factors can be processed independently from each other. This teaches us something about memory, gives insight into how one should compose for certain desired effects, and supports the idea that although these domains may share some cognitive resource (as found in neuroscience research), they may be processed separately. This finding calls into question Patel’s shared syntactic integration resource hypothesis, which would have been supported had there been an interaction between these two domains (Patel, 2003).
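The two effect sizes in this abstract can be checked against their F statistics. The sketch below assumes the standard relation between partial eta squared and F, ηp² = (F × df_effect) / (F × df_effect + df_error); the function name is a hypothetical helper and does not come from the poster.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effects reported in the abstract, both with (1, 60) degrees of freedom.
music_effect = round(partial_eta_squared(11.56, 1, 60), 3)
language_effect = round(partial_eta_squared(19.62, 1, 60), 3)
```

Both values round to the figures reported in the abstract (0.162 for music congruency and 0.246 for language congruency).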

Patel, A. D. (2003). Language, music, syntax and the brain. Nature Neuroscience, 6(7), 674-681.

Patel, A. D. (2010). Music, language, and the brain. Oxford University Press.

Slevc, L. R., Rosenberg, J. C., & Patel, A. D. (2009). Making psycholinguistics musical: Self-paced reading time evidence for shared processing of linguistic and musical syntax. Psychonomic Bulletin & Review, 16(2), 374-381.

Poster:


Citations:

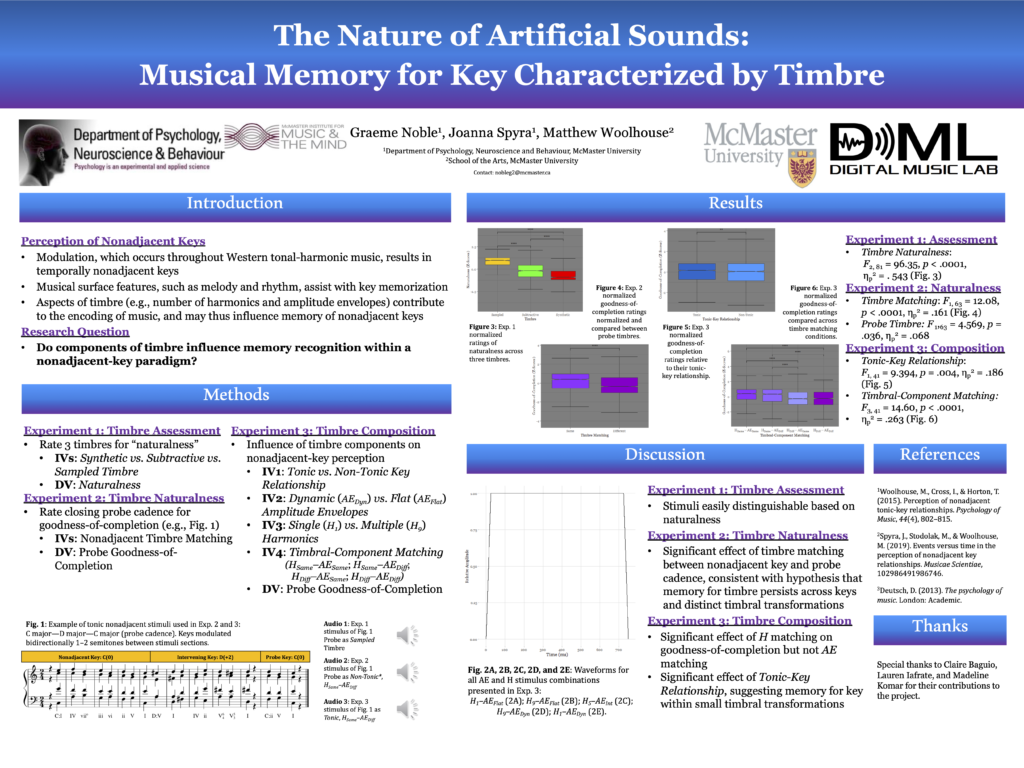

Noble, G., Spyra, J. & Woolhouse, M. H. The nature of artificial sounds: Musical memory for key characterized by timbre. Poster at ICMPC16/ESCOM16 (online). University of Sheffield, United Kingdom, 28 July-3 August 2021.

Abstract:

Previous research has investigated the extent to which an initial key is retained in short-term memory following a musical modulation (e.g., Farbood, 2016; Woolhouse, Cross, & Horton, 2016). While literature has covered several distinct aspects of music and their effects on memory (e.g., Spyra & Woolhouse, 2018), the contribution of timbre has yet to be studied with such scrutiny. Timbre (the ‘tone-colour’ of a sound) refers to the perception of overlaid pure-tone components with independent amplitude envelopes (AEs) varying in duration and intensity (Deutsch, 2013) that create the illusion of a unified sound. The current study deconstructed aspects of timbre with respect to number of harmonics (H#) and AE shape. In Experiment 1, participants were asked to distinguish between three timbres constructed with varying degrees of ‘naturalness’. In Experiment 2, the sounds from Experiment 1 were used to address whether timbral naturalness impacted key recognition. Lastly, Experiment 3 explored the extent to which individual components of timbre, H# or AE shape, influenced key memorization. Participants in Experiment 1 were asked to rate the naturalness of sampled vs. subtractive vs. synthesized sounds in a three-chord probe cadence (ii-V-I) in multiple keys. The subtractive timbre was constructed as a fast Fourier transform of a sampled piano sound, in which inharmonic and upper harmonic elements were omitted, functioning as an intermediate juxtaposition between sampled and additively synthesized (flat AE–pure tone) timbres. In Experiment 2, the three timbres described above were systematically distributed across 3 consecutive major-key stimulus segments using a nonadjacency paradigm: (1) an initial 8-chord sequence; (2) an intervening, 8-chord modulated key sequence, followed by a 1-beat rest; and (3) a probe cadence (selected from Experiment 1), sequentially nonadjacent to the initial key.
The timbre of the initial segment and probe cadence either matched or differed from each other, with timbres consisting of sampled audio, and/or a pure tone with a flat AE; the intervening sequence consistently used the subtractive timbre. Using the same nonadjacency paradigm in Experiment 3, AEs and H# were manipulated independently such that the initial segment and probe cadence were played with flat or dynamic AEs and either H1 or H9. The intervening sequence had a timbre of intermediate complexity (H5; quasi-dynamic AE). Participants in Experiments 2 and 3 provided goodness-of-completion ratings for the probe cadences using a 7-point Likert-type sliding scale. Experiment 1 yielded higher ratings of naturalness for sampled audio over subtractive over additive synthesized sounds (F(2, 81) = 96.35, p < .001, ηp² = .543), with stable ratings across pitch height transformations in all timbres except subtractive. In Experiment 2, participants’ ratings of goodness-of-completion were greatest when the probe cadence used a timbre that matched that of the nonadjacent segment (F(1, 63) = 12.08, p < .001, ηp² = .161) and possessed a natural quality (F(1, 63) = 4.569, p = .036, ηp² = .068). Experiment 3 revealed a significant effect of tonic-key relationship between the initial segment and probe cadence, independent of timbral manipulation (F(1, 41) = 9.394, p = .004, ηp² = .186). Participants’ judgement of goodness-of-completion was highest when the probe cadence’s timbre matched that of the initial segment (F(3, 41) = 14.60, p < .001, ηp² = .263). Pairwise comparisons for Timbral-Component Matching revealed a significant contribution of H# (p < .001), but not of AE (p > .1). The results of all three experiments demonstrate the importance of timbre for the perception of musical form involving distinct nonadjacent sections. Once the groundwork was laid in Experiment 1, Experiment 2 conveyed the significance of timbral matching between nonadjacent sections within a piece of music.
Irrespective of nonadjacent timbre type, timbral matching was rated higher than non-matching for each stimulus. Experiment 3 showed that the contributions of harmonic components overshadowed the nonadjacent memory effects of AE shape. Participants consistently preferred stimuli that returned to the original key, despite timbral changes.
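To make the two manipulated components concrete, here is a minimal additive-synthesis sketch that varies the number of harmonics (1 or 9, as in Experiment 3) and the amplitude-envelope shape (flat or dynamic). The 1/k harmonic weighting, the exponential decay rate, the sample rate, and the function name `additive_tone` are all assumptions made for illustration; the abstract does not specify how the stimuli were generated.

```python
import math


def additive_tone(f0, n_harmonics, envelope="flat", duration=0.5, sample_rate=8000):
    """Sum sine harmonics of f0 and apply a flat or decaying amplitude envelope.

    Harmonic k is weighted by 1/k and the "dynamic" envelope is an
    exponential decay; both are hypothetical choices for this sketch.
    Returns a list of samples normalised to a peak of 1.0.
    """
    n_samples = int(duration * sample_rate)
    samples = []
    for i in range(n_samples):
        t = i / sample_rate
        value = sum(
            math.sin(2 * math.pi * k * f0 * t) / k
            for k in range(1, n_harmonics + 1)
        )
        if envelope == "dynamic":
            value *= math.exp(-5.0 * t)  # hypothetical decay rate
        samples.append(value)
    peak = max(abs(s) for s in samples) or 1.0
    return [s / peak for s in samples]


pure_flat = additive_tone(220.0, n_harmonics=1, envelope="flat")
rich_dynamic = additive_tone(220.0, n_harmonics=9, envelope="dynamic")
```

Crossing the two harmonic counts with the two envelope shapes gives the four timbres that such a design would compare between the initial segment and the probe cadence.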

Deutsch, D. (Ed.). (2013). Psychology of music (pp. 26–58). Elsevier.

Farbood, M. (2016). Memory of a tonal center after modulation. Music Perception, 34(1), 71–93. doi:10.1525/mp.2016.34.1.71

Spyra, J., & Woolhouse, M.H. (2018). Effect of melody and rhythm on the perception of nonadjacent harmonic relationships. In Parncutt, R., & Sattmann, S. (Eds.) Proceedings of ICMPC15/ESCOM10, 421–425. Graz, Austria: Centre for Systematic Musicology, Univ. of Graz.

Woolhouse, M., Cross, I., & Horton, T. (2016). Perception of nonadjacent tonic-key relationships. Psychology of Music, 44(4), 802–815. doi:10.1177/0305735615593409

Poster:

Citations:

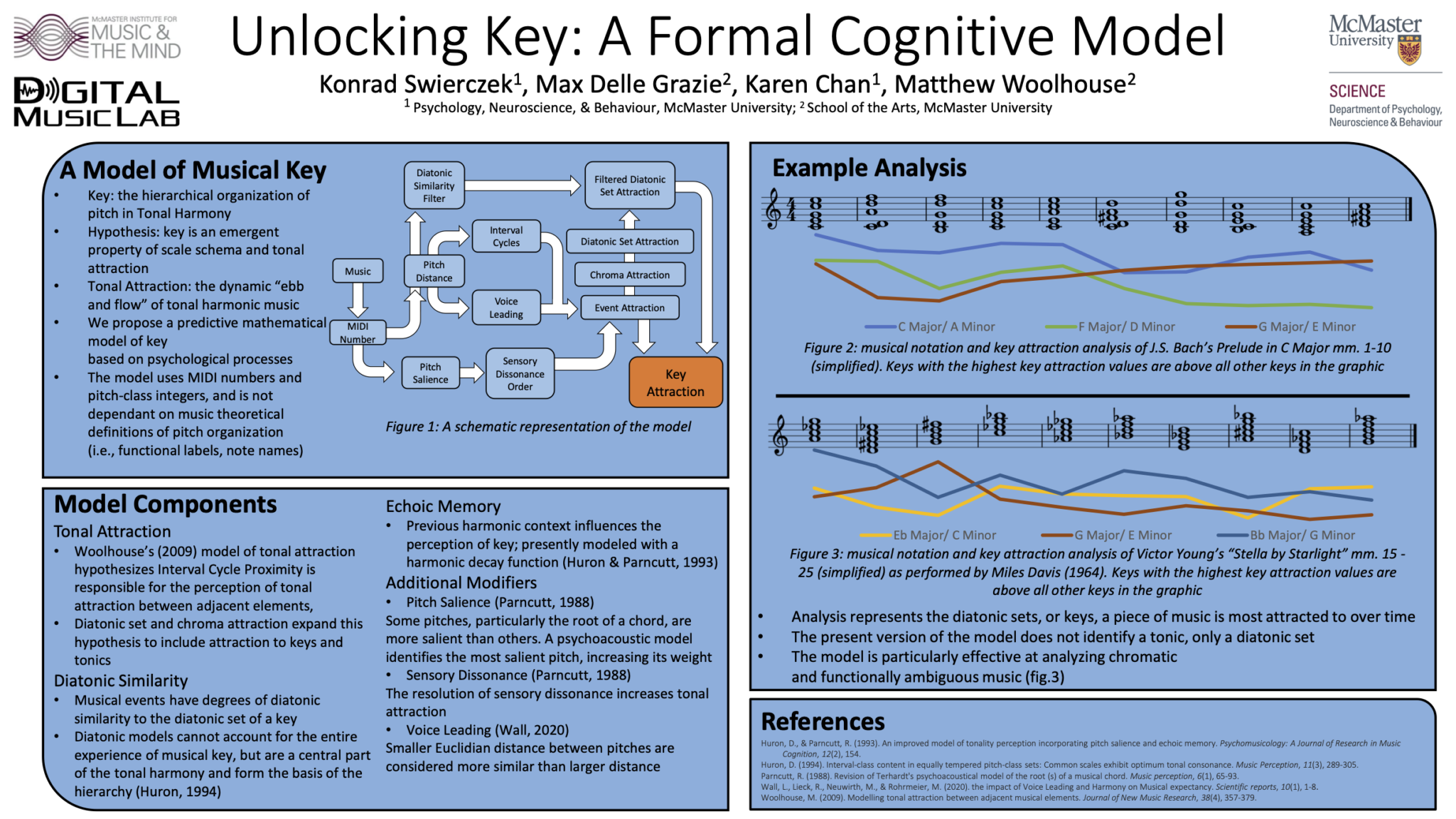

Swierczek, K., Delle Grazie, M., Chan, K. & Woolhouse, M. H. Unlocking key: A formal cognitive model. Poster at 16th Annual NeuroMusic Conference, McMaster University, Canada, 14 November 2020.

Abstract:

Musical key, the hierarchical organization of pitch in a piece of music, is a central part of the perception of Western tonal harmony. Even listeners without formal musical training are adept at detecting key, along with its referential pitch, or tonic. Traditional music theory and some recent psychological models of musical key tend to focus on diatonic set membership, determining key by evaluating what diatonic set best fits a piece of music. However, since the 19th century, western tonal harmonic music has grown to include complex harmonic features such as chromaticism (the use of notes outside of the diatonic set) and the more frequent use of modulation (changes in key). Given that these compositional techniques are often used in popular music up to the present day and often do not inhibit the perception of key, we hypothesize that tonal attraction (the perception of pull, push, tension, momentum, and inertia in music) plays a role in the perceptual determination of a musical key. The proposed formal model of key uses a combination of Interval Cycle Proximity (a measure of tonal attraction), the rare intervals hypothesis (diatonic set membership), short term memory, pitch salience, and sensory dissonance to predict the perception of key across a piece of music by encultured listeners. The predictions of the model will change our understanding of musical structure and provide new insights into previously ambiguous music as well as compositional techniques.

Poster:

Citations:

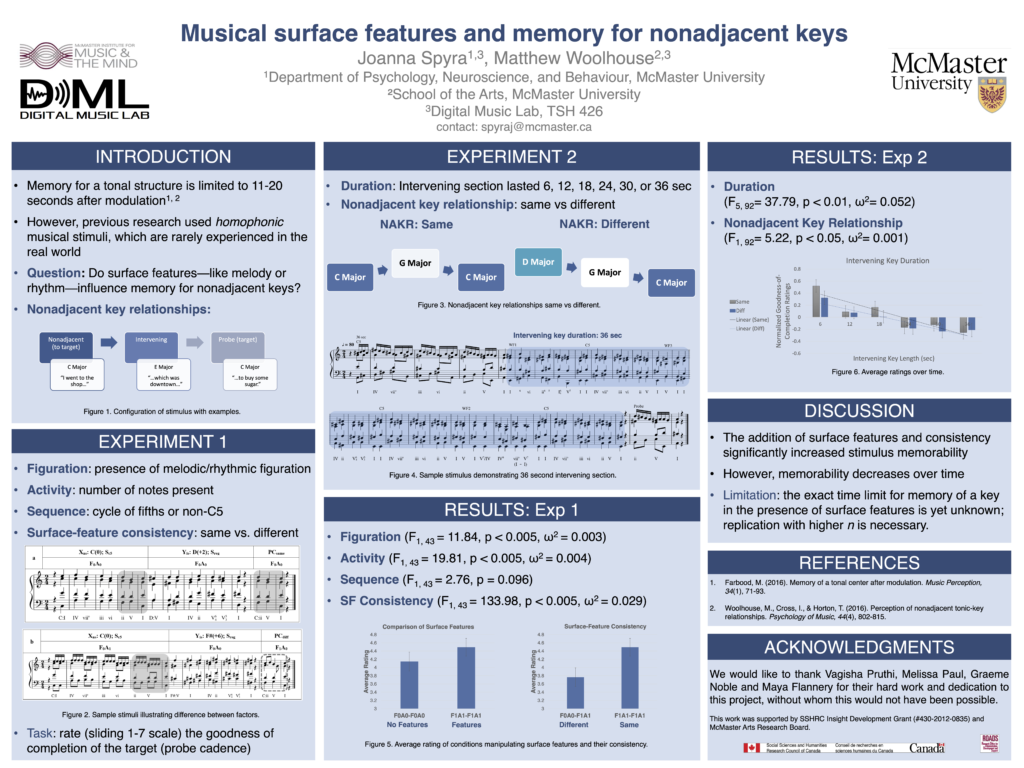

Spyra, J. & Woolhouse, M. H. Musical surface features and memory for nonadjacent keys. Poster at 16th Annual NeuroMusic Conference, McMaster University, Canada, 14 November 2020.

Abstract:

Music cognition experiments often use homophonic stimuli which are well controlled but have arguably low generalizability. In the current study, memory for an original musical key was tested by juxtaposing homophonic stimuli to those with two additional melodic surface features: Figuration, i.e. melodic decoration; and Activity, i.e. melodic busyness. Stimuli consisted of three sections: a key-establishing nonadjacent section (X_ns), a modulation to an intervening section (Y_is), and a probe cadence in the key of X_ns (X_pc) which was rated by participants for “goodness of completion”. Figuration was operationalized as the addition to X_ns of melodic and rhythmic figuration, such as neighbour tones and suspensions. Activity was manipulated by adding repeated notes (for example, eighth notes instead of quarter notes). Lastly, in a following experiment, the duration of Y_is was extended to last between 6 and 36 seconds. Both Figuration and Activity had significant main effects in which the presence of each surface feature was rated higher, on average, than its absence. Y_is Duration also reached significance; average ratings decreased as the duration of Y_is increased. Results suggest that memory for nonadjacent keys presented with salient features is stronger. However, this memory decays over time, the full extent of which is, as yet, unclear.

Poster:

Citations:

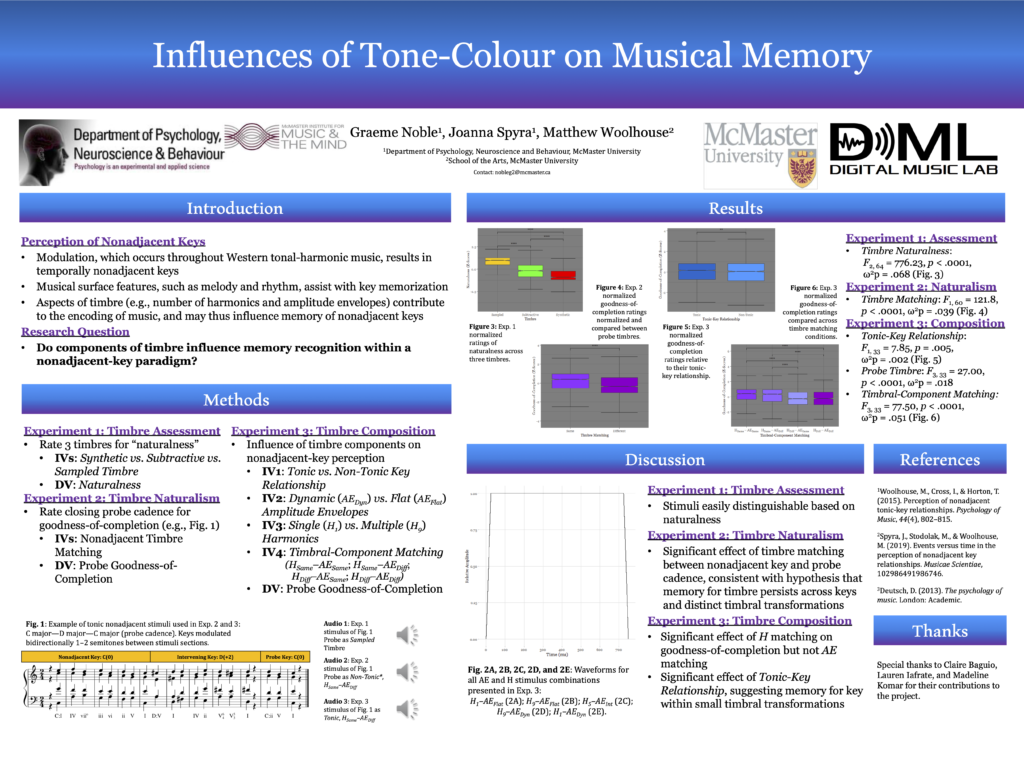

Noble, G., Spyra, J. & Woolhouse, M. H. Influences of tone-colour on musical memory. Poster at 16th Annual NeuroMusic Conference, McMaster University, Canada, 14 November 2020.

Abstract:

Previous research has investigated the extent to which an initial key is retained in short-term memory following a musical modulation. While literature has covered several distinct aspects of music and their effects on memory, the contribution of timbre has yet to be studied with such scrutiny. Timbre (the ‘tone-colour’ of a sound) consists of pure-tone components with independent amplitude envelopes (AEs) varying in duration and intensity. The current study explored these two aspects of timbre by manipulating number of harmonics and AE shape. Participants in Experiment 1 were asked to rate the naturalness of sampled vs. subtractive vs. additive synthesized sounds. In Experiment 2, the sounds from Experiment 1 were interjected into a modulating passage to address whether timbral naturalness impacted key memorization. Using the sequences from Experiment 2, Experiment 3 explored the extent to which individual components of timbre influenced key memorization. Here, AEs and the number of harmonics were manipulated independently such that the initial segment and probe cadence were played with flat or dynamic AEs and either 1 or 9 harmonics. In summary, stimuli were easily and appropriately distinguishable for their subjective naturalness. Furthermore, participants’ judgements of completion were greatest for probe cadences with a natural timbre and those that matched the timbre of the initial segment. Ratings were significantly higher when the initial segment and probe cadence were matched for harmonics, rather than their AEs. We thus conclude that the harmonic component of timbre within our stimuli was critical with respect to observed memory effects.

Poster:

Citations:

Flannery, M. B. & Woolhouse, M. H. Introducing MAFs: Music acoustic features and their relationship to personality. Poster at 16th Annual NeuroMusic Conference, McMaster University, Canada, 14 November 2020.

Abstract:

Music preference is usually investigated by associating personality factors with various attributes such as genre (e.g., Classical, Pop), musical-preference dimensions (e.g., “Reflective and Complex”, “Intense and Rebellious”), and psychological attributes (e.g., happy, sad). Although such classifications may be informative with regard to the everyday language we use to describe music, they are weak predictors of the acoustic qualities of the music we prefer. In other words, the link between personality and preference for musical styles is not specific. The present study addressed this issue with the measurement of personality factors (using the 44-item Big Five Inventory) and preference ratings of musical stimuli that were systematically varied with what we describe as Music Acoustic Features (MAFs). The manipulation of MAFs within the experiment afforded us a high degree of control over the stimuli, whilst maintaining musical ecological validity. Participants (N = 90) listened to three piano excerpts (Piece) that were varied by the MAFs Dynamic, Mode, Register, and Tempo, resulting in 48 stimuli (3x2x2x2x2). After listening to each stimulus, preference was rated on a scale from 0 (dislike) to 100 (like). Results of mixed factorial ANOVAs (personality traits as between-subject factors, MAFs as within-subject factors) showed main effects of the personality factor Agreeableness, and the MAFs Dynamic, Mode, Piece, and Tempo. Furthermore, interactions between a number of personality factors and MAFs were significant. For example, people with high Neuroticism disproportionately preferred fast tempo music. Our results establish new insights regarding the relationship between personality and specific features of music.
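The 3x2x2x2x2 design can be enumerated directly to confirm the count of 48 stimuli. In this sketch the factor names follow the abstract, but every level label (for example "piece_1", "forte", "slow") is a hypothetical placeholder, since the abstract does not list the levels used.

```python
from itertools import product

# Factor names come from the abstract; the level labels are placeholders.
factors = {
    "Piece": ["piece_1", "piece_2", "piece_3"],
    "Dynamic": ["piano", "forte"],
    "Mode": ["major", "minor"],
    "Register": ["low", "high"],
    "Tempo": ["slow", "fast"],
}

# Every combination of one level per factor is one stimulus.
stimuli = [dict(zip(factors, levels)) for levels in product(*factors.values())]
n_stimuli = len(stimuli)
```

Each participant would then rate all `n_stimuli` combinations, which is what makes the MAFs within-subject factors in the analysis.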

Poster:

Citations:

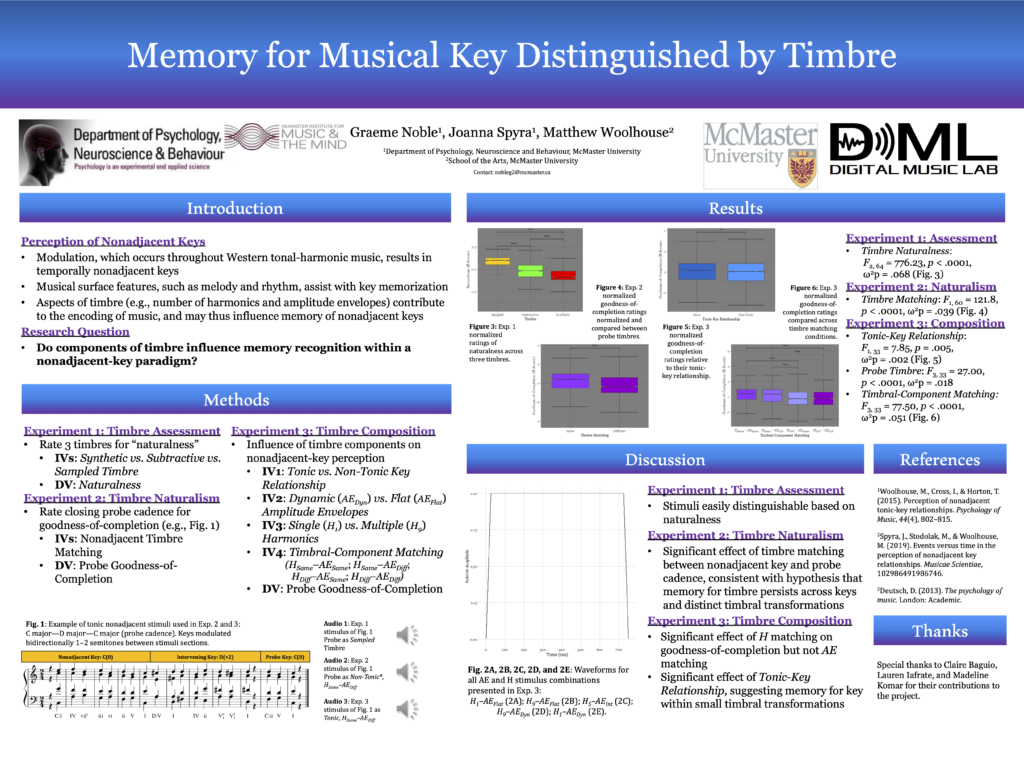

Noble, G., Spyra, J., & Woolhouse, M. H. Memory for musical key distinguished by timbre. Poster at 2nd International Conference on Timbre (Timbre 2020), (online). Thessaloniki, Greece, 3-4 September 2020.

Abstract:

This study operationalized timbre as consisting of pure-tone components with independent amplitude envelopes (AEs) varying in duration and intensity. The study explored these two aspects of timbre with respect to number of harmonics and AE shape. To validate an underlying assumption of the study (that artificial qualities of sounds are distinguishable), participants in Experiment 1 were asked to distinguish between three timbres constructed with varying degrees of ‘naturalness’. In Experiment 2, the sounds from Experiment 1 were used to address whether timbral naturalness impacted key memorization. Using the sequences from Experiment 2, Experiment 3 explored the extent to which individual components of timbre influenced key memorization. Three hypotheses underpinned the study: (1) that timbres are distinguishable in terms of naturalness (Experiment 1); (2) that matching timbres between nonadjacent sections elicit higher goodness-of-completion ratings (Experiment 2); and (3) that distinct elements of timbre, as operationalized above, elicit different ratings (Experiment 3). Data from Experiment 1 were consistent with the hypothesis: higher ratings of naturalness were obtained for sampled audio over subtractive over additive synthesized sounds (F(2, 64) = 776.23, p < .0001, ωp² = .068). These observations were consistent across pitch height transformations. In Experiment 2, participants’ judgement of goodness-of-completion was greatest when the probe cadence used a timbre that matched that of the nonadjacent segment (F(1, 60) = 121.8, p < .0001, ωp² = .039). Results from Experiment 3 revealed a significant effect of tonic-key relationship between the initial segment and probe cadence, independent of timbral manipulation (F(1, 33) = 7.85, p = .005, ωp² = .002).
Participants’ judgement of goodness-of-completion was greatest when the probe cadence used the most natural timbre (F(3, 33) = 27.00, p < .0001, ωp² = .018) or a matching timbre to the initial segment (F(3, 33) = 77.50, p < .0001, ωp² = .051). The results of all three experiments demonstrate the importance of timbre for the perception of musical form involving discrete, nonadjacent sections.

Poster:

Citations:

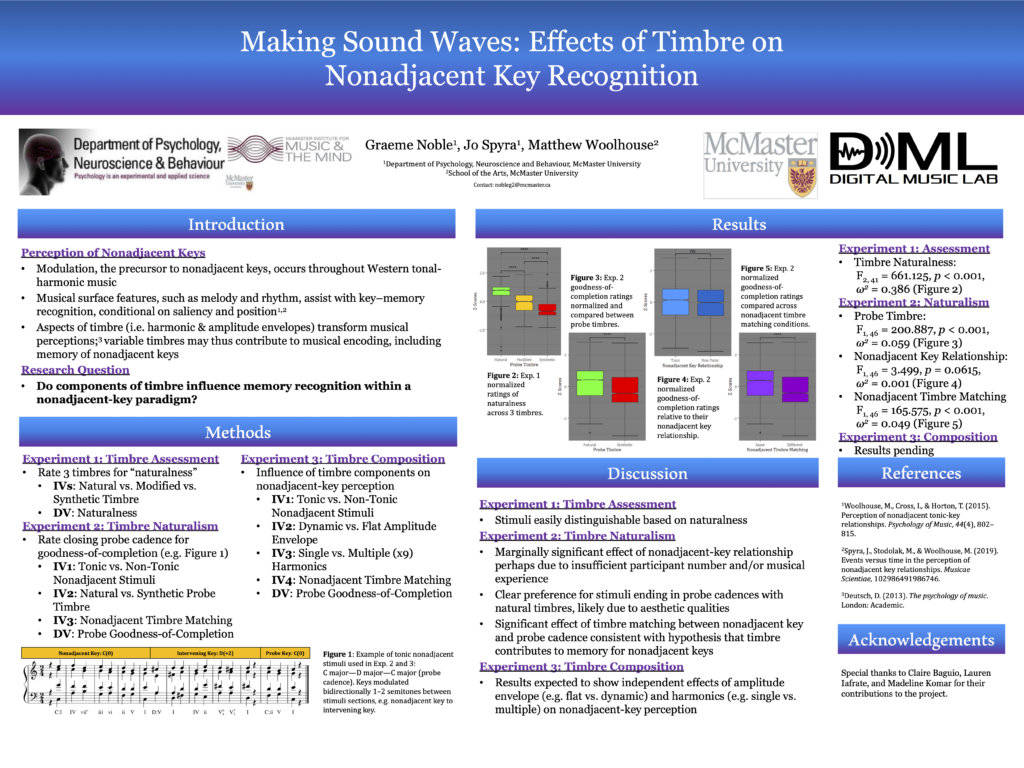

Noble, G., Spyra, J. & Woolhouse, M. H. Making sound waves: Effects of timbre on nonadjacent key recognition. Poster at 15th Annual NeuroMusic Conference, McMaster University, Canada, 9 November 2019.

Abstract:

Previous research suggests a positive effect of diverse musical surface features (e.g. melodic figuration) on nonadjacent key recognition and memorization. However, the effect of timbre (i.e. sound ‘colour’) has evaded scrutiny. Timbre, often considered the complexity of a tone, consists of a mixture of frequencies, amplitudes and amplitude envelopes, varying in duration and intensity. The current study operationalized timbre as the synthesis of amplitude envelope and harmonic structure. In Experiment 1, we focused on the naturalism of synthesized instruments across sampled, subtractive, and additive synthesis sound sources. Experiment 2 sought to address how individual components of timbre uniquely impact key memorization. Timbral qualities were distributed amongst three distinct stimulus segments: a traditional major-key sequence, constituting the nonadjacent key; an intervening-key sequence; and a probe cadence that either returned to the original key or repeated the intervening-key modulation. In Experiment 1, nonadjacent-probe pairs either matched or differed in holistic timbral complexity. However, in Experiment 2, amplitude envelope and harmonic number were manipulated independently of one another. After each probe sequence, participants provided a comprehensive goodness-of-completion rating through a 7-point Likert-type sliding scale. While data collection is ongoing, we expect positive correlations between timbral complexity and nonadjacent key memorization. Due to their close similarities to naturally generated timbre, complex spectral conditions emulate musical instruments the listener might recognize, likely heightening memory performances relative to other artificial timbres.

Poster:

Citations:

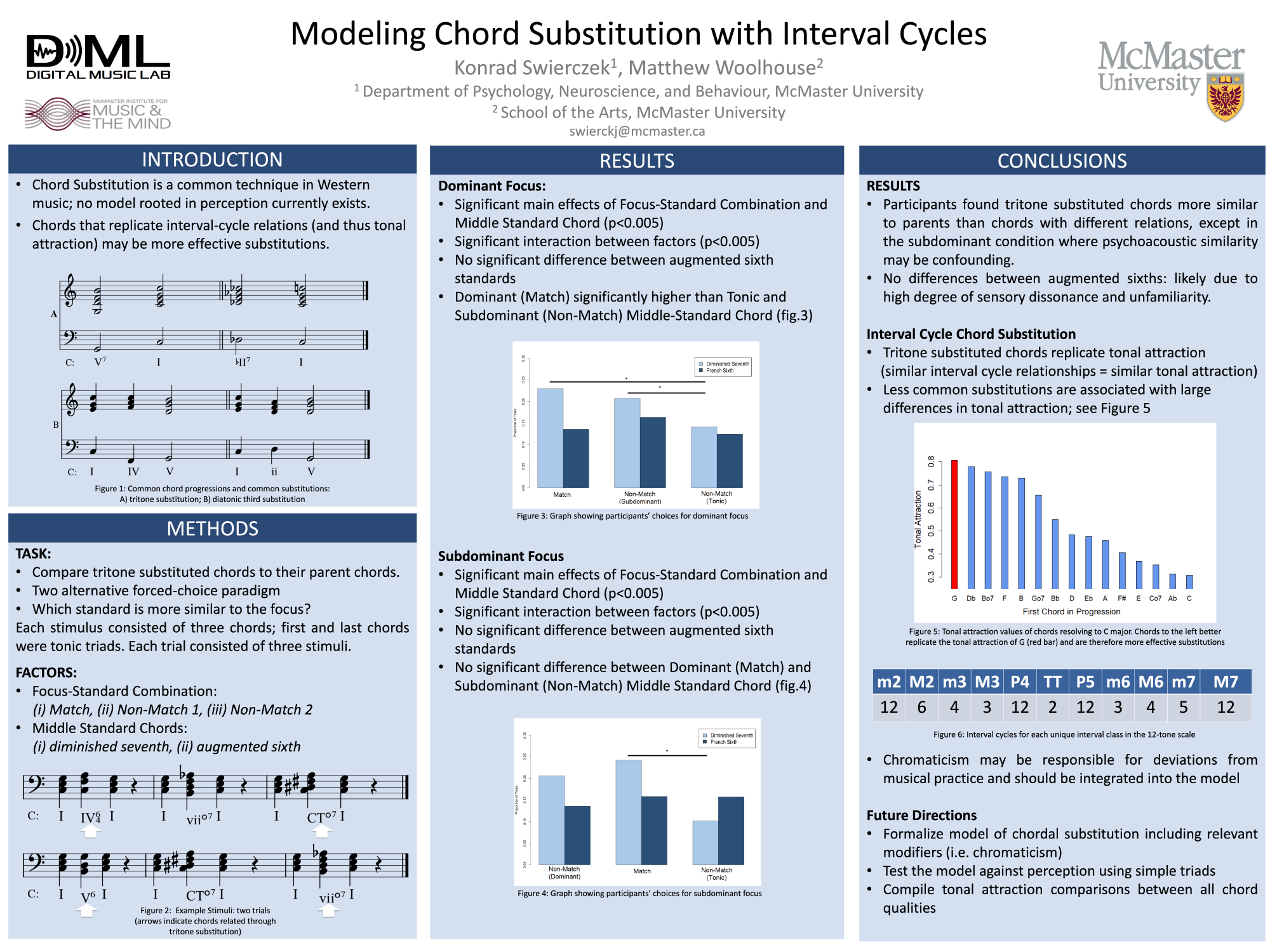

Swierczek, K. & Woolhouse, M.H. Modelling chord substitution with interval cycles. Poster at 15th Annual NeuroMusic Conference, McMaster University, Canada, 9 November 2019.

Abstract:

Chord substitution is a common compositional and improvisational device in Western tonal-harmonic music. A successfully substituted chord has the same harmonic function as its parent, but contains one or more altered (i.e. substituted) pitches. Interval cycles (IC) have been implicated as a cognitive correlate of tonal attraction (Woolhouse, 2009), and could similarly provide a framework for the perception of chord substitution. We propose a chord-substitution model in which chords with low IC relationships have a high degree of functional similarity to parent chords, while chords with high IC relationships are more functionally dissimilar to parent chords. This is consistent with musical practice: tritone substitution is one of the commonest chromatic substitutions; the tritone has a relatively low IC value of 2. In contrast, the highest IC value, 12, is associated with semitones, perfect fourths and fifths. Chords whose roots are related by these intervals are rarely associated with substitution. The predictive power of the model was tested using a two-alternative forced-choice paradigm. Participants were required to identify which of a pair of diminished-seventh chords was more closely related to a parent triad. Results showed that participants judged diminished-seventh chords related to the parent by a tritone (i.e. IC = 2) to be more similar than chords with different intervallic relationships (i.e. IC > 2). A perceptual model of chord substitution will help to clarify which substitutions are possible by virtue of perceptual similarity, and which are dissimilar and thus problematic within a tonal-harmonic context.
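The IC values quoted in this abstract can be reproduced with a short calculation. The sketch assumes that an interval's IC value is the number of times the interval must be stacked before it returns to the starting pitch class in 12-tone equal temperament, i.e. 12 / gcd(interval, 12). This matches the values given here (tritone = 2; semitone, perfect fourth and perfect fifth = 12), but it is a simplification and may not capture every detail of the model in Woolhouse (2009). The function name is hypothetical.

```python
from math import gcd


def interval_cycle_length(semitones):
    """Number of steps of `semitones` needed to return to the starting pitch class."""
    return 12 // gcd(semitones % 12, 12)


# Intervals mentioned in the abstract, given in semitones.
ic_values = {
    "semitone": interval_cycle_length(1),
    "perfect fourth": interval_cycle_length(5),
    "tritone": interval_cycle_length(6),
    "perfect fifth": interval_cycle_length(7),
}
```

Under this assumption, a root relationship with a short cycle (such as the tritone) is predicted to be functionally closer to the parent chord than one with a long cycle.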

Poster:

Citations:

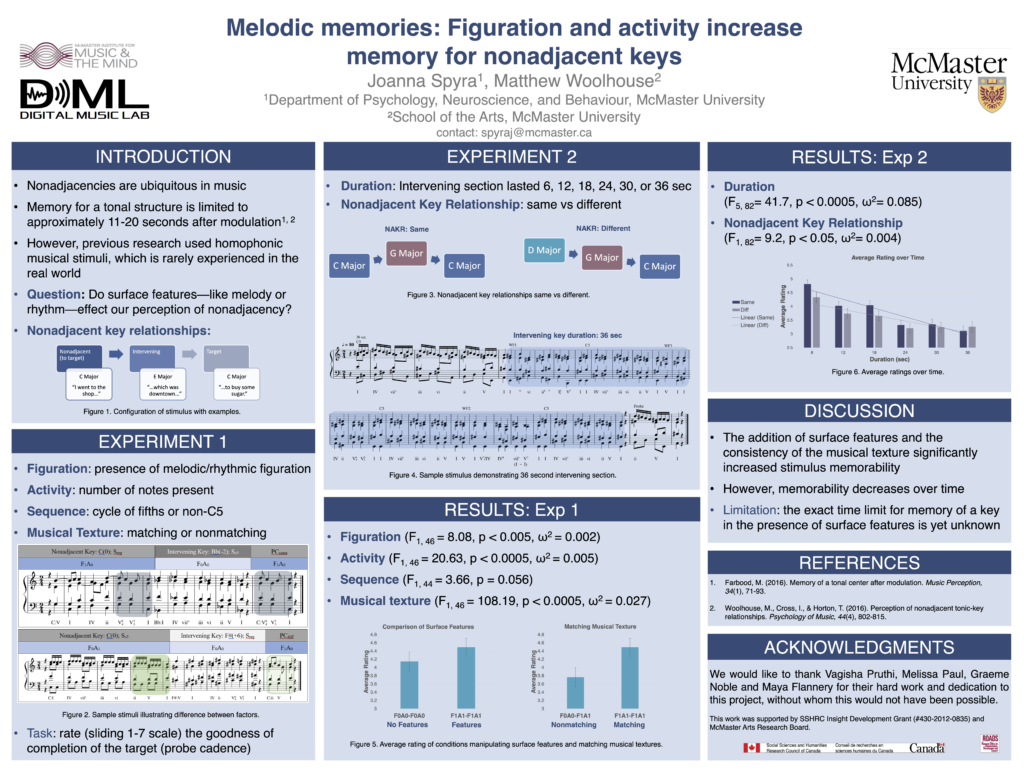

Spyra, J. & Woolhouse, M.H. Melodic memories: Figuration and activity increase memory for nonadjacent keys. Poster at 15th Annual NeuroMusic Conference, McMaster University, Canada, 9 November 2019.

Abstract:

We investigate two surface features within music that may contribute to an initial key being remembered following modulation: (1) Figuration, i.e. melodic decoration; and (2) Activity, i.e. melodic busyness. The stimuli were composed of three sections: X1 (key-establishing sequence; tonic), Y (second key; intervening modulation), and X2 (probe cadence sequence in the key of X1, rated by participants for “goodness of completion” of the preceding phrase). Figuration was manipulated by adding melodic or rhythmic figuration, such as passing tones and/or suspensions, thereby increasing the surface complexity of the stimuli. Activity was operationalized with the addition of repeated notes (e.g. eighth notes instead of quarter notes). The effects of these factors were examined by comparing their presence or absence within the stimuli. Significant main effects of both Figuration (F(1, 44) = 6.08, p = 0.01) and Activity (F(1, 44) = 27.40, p < 0.005) were found. There was also a significant main effect of intervening-key duration (F(5, 92) = 38, p < 0.01), where average ratings decreased as the duration of the intervening modulation increased. These results suggest that listeners form stronger memories for nonadjacent keys when they are presented with salient surface features, but that this memory decreases over time. In sum, the study provides evidence that the addition of surface features such as melodic figuration and rhythmic activity prolongs memory for an initial key following modulation, although the full extent of this effect is as yet unclear.

Poster:

Citations:

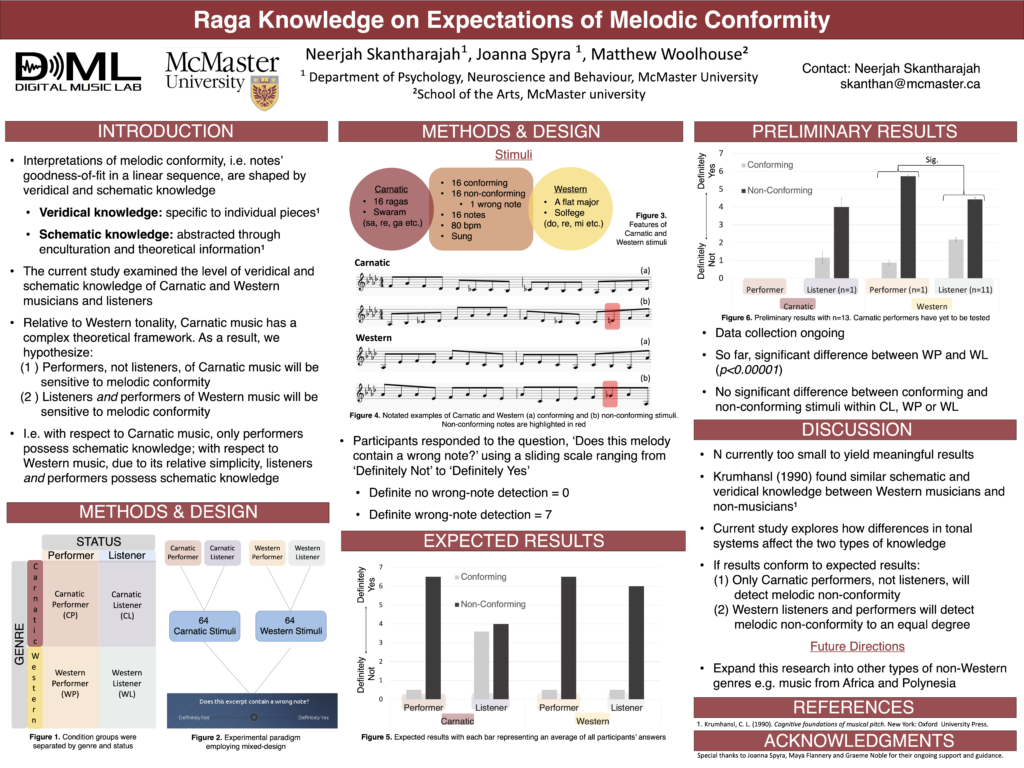

Skantharajah, N. & Woolhouse, M. H. Quantifying Karnāṭaka: Raga knowledge on expectations of melodic conformity. Poster at Society for Music Perception and Cognition (SMPC) Conference, New York, USA, 5-7 August 2019.

Abstract:

Interpretations of melodic conformity, i.e. the goodness of fit of notes within a linear sequence, are shaped by veridical and schematic knowledge. Previous studies have found similarities in schematic knowledge between Western musicians and non-musicians (Krumhansl, 1990). Arguably, this can be accounted for by the relatively constrained nature of Western tonality: the more-or-less exclusive use of two modes enables non-musicians to schematically learn Western tonality through enculturation, including in infancy. In contrast to Western tonality, South Indian classical music has a relatively complex theoretical framework, employing over 100 unique ragas, or scales. Given this complexity, the current study attempted to uncover the presence or absence of schematic knowledge within Carnatic and Western music using four groups: Carnatic performers and listeners, and Western performers and listeners. Two sets of novel melodic stimuli were created: one for Carnatic participants, the other for Western participants. The stimuli were further subdivided into those conforming to the raga/mode and those that were non-conforming due to the presence of a single wrong note, i.e. an out-of-scale tone. Stimuli were 6-second sung recordings containing 16 notes at 80 bpm. Participants were asked if the stimuli contained a wrong note and answered using a 5-point scale ranging from “definitely” to “definitely not.” We hypothesized (1) that Carnatic performers would have significantly more correct responses than Carnatic listeners, and (2) that there would be relatively little difference between Western performers and listeners. Full results are pending; however, if confirmed, this outcome will suggest that within a Carnatic context, schematic knowledge for melodic conformity is possessed only by performers, i.e. experts, whereas in the Western context, both performers and listeners are sensitive to melodic conformity, i.e. both groups have schematic knowledge. We assume that this is due to the relatively complex theoretical underpinnings of Carnatic music.

Krumhansl, C. L. (1990). Cognitive foundations of musical pitch. New York: Oxford University Press.

Poster:

Citations:

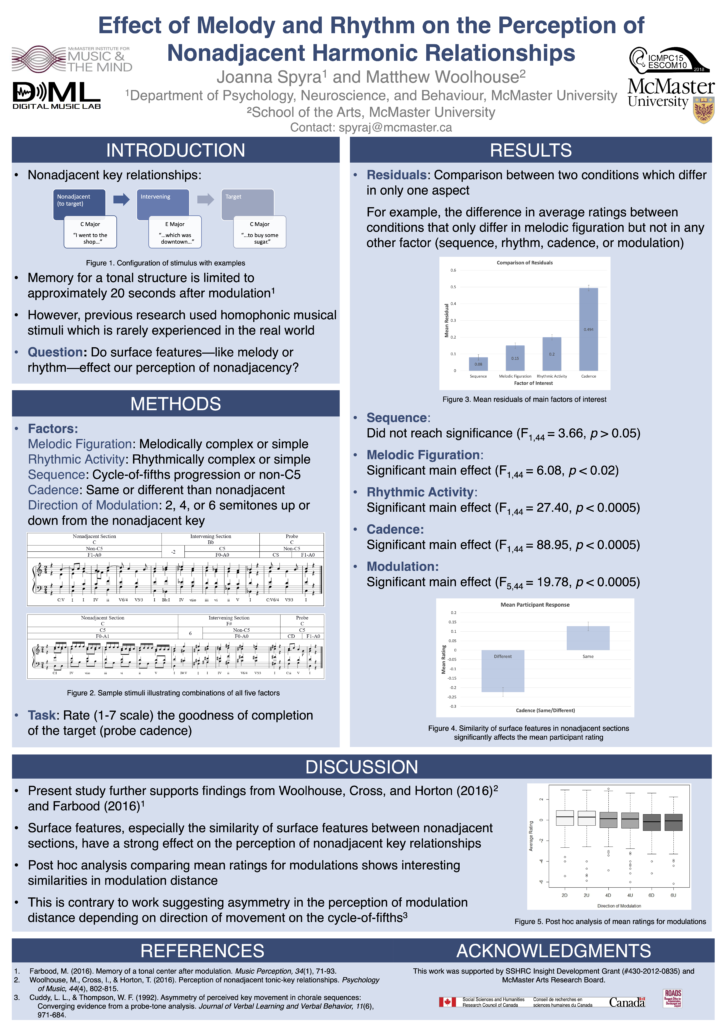

Spyra, J. & Woolhouse, M. H. Effect of melody and rhythm on the perception of nonadjacent harmonic relationships. Poster at 15th International Conference on Music Perception & Cognition. Montréal, Canada, 23-28 July 2018.

Abstract:

Temporally nonadjacent key relationships are ubiquitous within tonal-harmonic music. However, although theoretically posited as being important, the degree to which they are perceived beyond relatively short durations is uncertain. Using a stimulus-matching paradigm, Woolhouse et al. (2016) found that increasing the duration of an intervening key to ca. 12 s overwrote the memory of the original, nonadjacent key. Farbood (2016) found that the memory for a key remains active for 20 s after modulation. In contrast, the findings of Cook (1987), who carried out similar experiments using repertoire pieces, suggest that the perception of large-scale tonal structures could extend up to a minute. The stimuli of Woolhouse and Farbood were limited to the harmonic domain: in Woolhouse et al. (2016), the textures were homophonic; in Farbood (2016), participants listened to repeating arpeggios. Yet the use of real excerpts by Cook (1987), and the extended effects he obtained, suggest that musical features, in addition to harmony, may be important in maintaining nonadjacent key relationships. The current study aimed to investigate this in a controlled manner by manipulating specific features of the musical surface, and testing the effect of these manipulations on global harmonic perceptions. Two music-theoretically defined features were tested: melodic figurations (e.g. chordal skips and passing tones) and rhythmic figurations (e.g. anticipations and suspensions). The method uses the stimulus-matching paradigm of Woolhouse et al. (2016), in which the influence of a particular manipulation (in this case, the musical surface) is observed by pairing two stimuli, identically matched except for the feature under investigation. Concluding each stimulus is a probe cadence, rated by participants for goodness-of-closure (of the previous phrase). The overall form of the stimuli is: A1 (key-establishing sequence), B (second key), and A2 (probe cadence having a tonic relationship to A1).
We investigate the effects of the musical surface from two perspectives: (1) whether the presence of melodic and rhythmic figurations increases the effect of A1 (the key nonadjacent to the probe cadence); and (2) whether their presence in B (the second key) affects the extent to which A1 (the original, nonadjacent key) is overwritten, i.e. erased from memory. Full results are pending; however, initial findings suggest that the presence of musical-surface features in A1 significantly increases participants’ ratings of A2, the probe cadence, i.e. the retention in memory of A1 is enhanced. In contrast, somewhat unexpectedly, the inclusion of melodic and rhythmic figurations within the second key, B, did not adversely affect the influence of A1. Our provisional results support the notion that surface musical features contribute to the establishment and maintenance of temporally nonadjacent key relationships within tonal-harmonic music.

Cook, N. (1987). The perception of large-scale tonal closure. Music Perception, 5(2), 197-206.

Farbood, M. (2016). Memory of a tonal center after modulation. Music Perception, 34(1), 71-93.

Woolhouse, M., Cross, I., & Horton, T. (2016). Perception of nonadjacent tonic-key relationships. Psychology of Music, 44(4), 802-815.

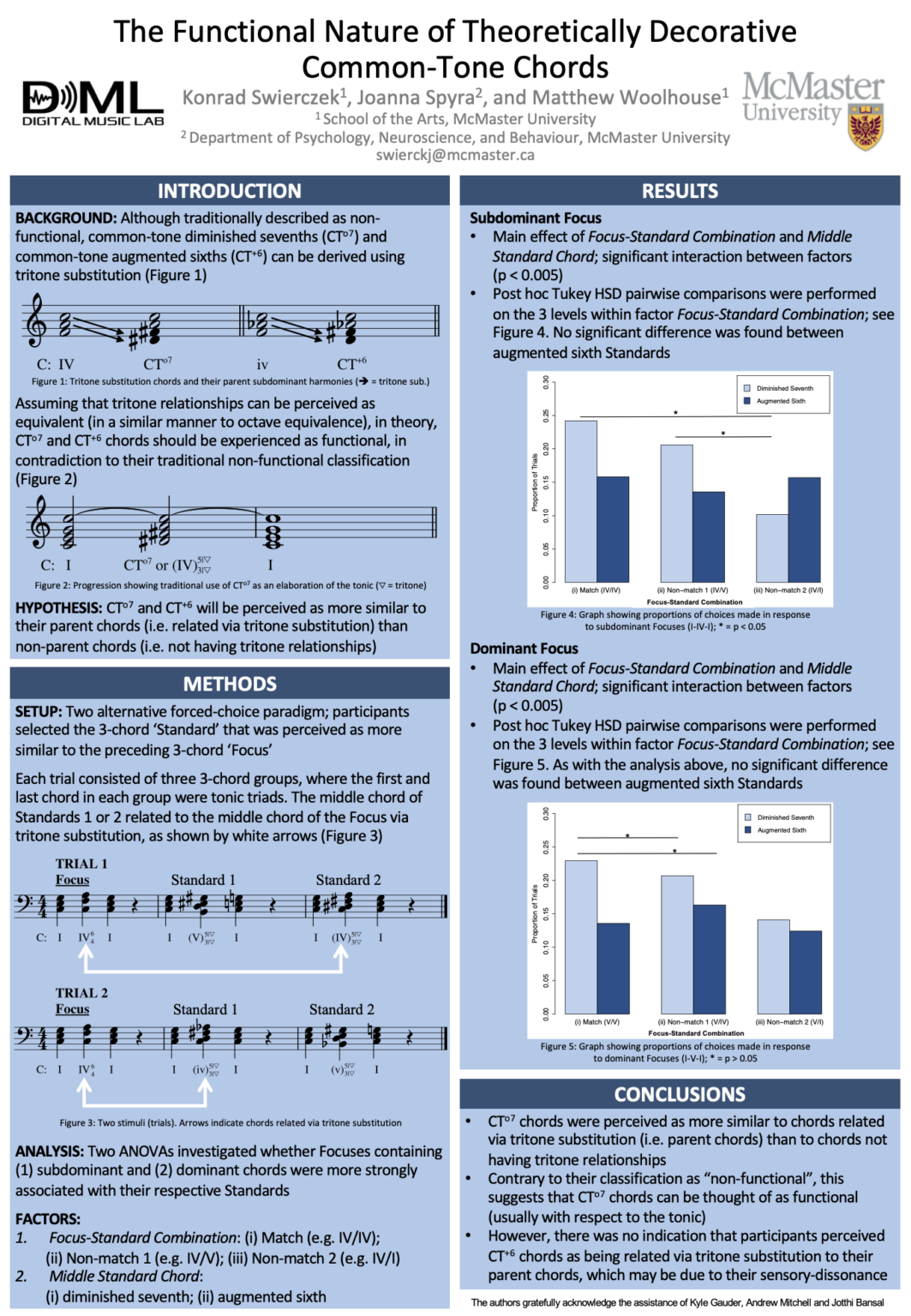

Poster:

Citations:

Swierczek, K., Spyra, J. & Woolhouse, M. H. The functional nature of theoretically non-functional diminished seventh chords. Poster at 15th International Conference on Music Perception & Cognition. Montréal, Canada, 23-28 July 2018.

Abstract:

A goal of music theory is to classify patterns in music in order to understand the structure of music. While these principles quite often coincide with perception, as is arguably the case with mixture and applied dominants, complex cases require more nuanced explanations. The non-dominant diminished seventh, or common-tone diminished seventh (CTo7), is such an example, being typically interpreted as non-functional decorative harmony (Piston, 1978), despite its use within otherwise well-formed musical phrases. More generally, the ambiguous nature of diminished seventh chords presents an obstacle for theoretical classification. Through the principle of “tritone substitution” of tones belonging to the subdominant harmony, we identify the CTo7 as a predominant chord, relative to the key region of the common tone. In the same way that the subdominant can approach the dominant or return to the tonic, this theory relates CTo7 to its dominant enharmonic equivalents. In order to test this theoretical notion, this study aims to connect the CTo7 with its related subdominant harmony through a psychological experiment, testing the validity of the theory against perception. If our conjecture is correct, results will indicate how the three identities of diminished seventh chords (tonic, dominant, subdominant) behave functionally despite their ambiguous nature. The three possible (enharmonically spelt) diminished seventh chords are related to simple diatonic tonic-predominant and tonic-dominant structures using a two-alternative forced-choice paradigm. Participants select the one of two diminished seventh chord progressions that they deem to be most similar to the presented stimulus. For instance, when presented with a tonic-predominant progression, we hypothesize the participant will select CTo7 over CTo7/V due to its tritone relationship with the subdominant. The effects of mode and voicing are controlled for due to their potential confounding nature.
Data analysis is currently underway. We expect participants to choose the CTo7 over CTo7/V or the dominant diminished seventh (viio7) when the stimulus presented is tonic-subdominant. This would confirm that the CTo7 is indeed perceptually and therefore functionally related to the subdominant harmony by tritone substitution, in addition to sharing a greater number of chord tones.

Poster:

Citations:

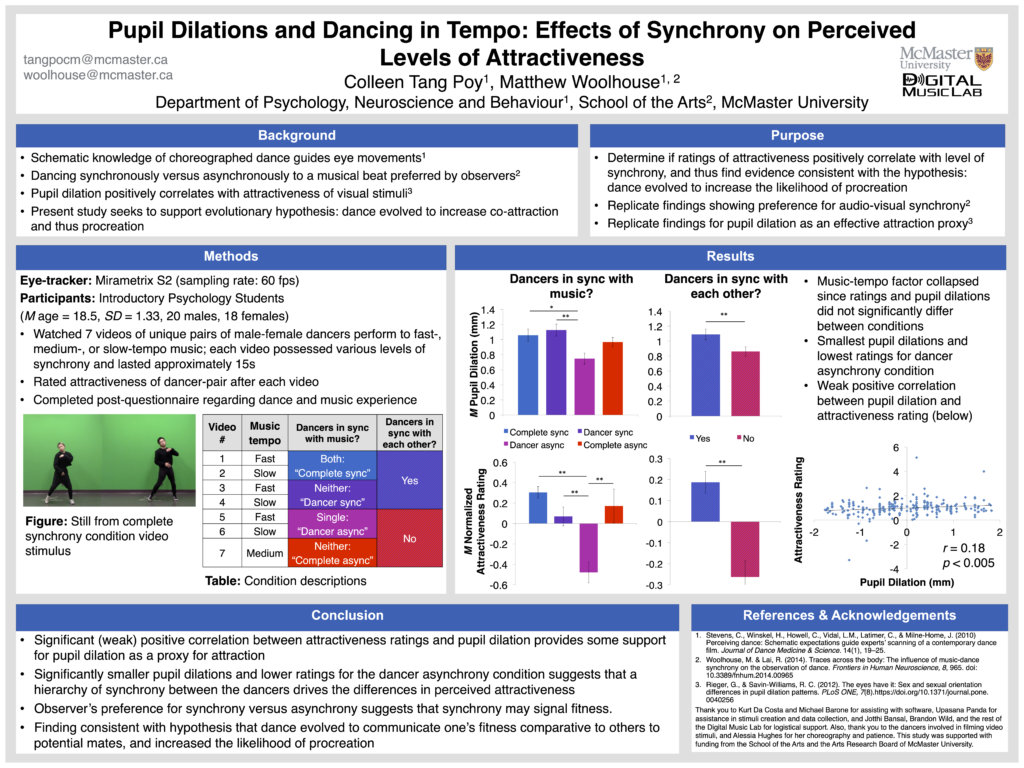

Woolhouse, M. H. & Tang Poy, C. Effect of dance synchrony on perceived levels of attractiveness: a pupillary dilation study. Poster at Conference on Music and Eye-Tracking. Max Planck Institute for Empirical Aesthetics, Frankfurt, Germany, 17-18 August 2017.

Tang Poy, C., Panda, U., & Woolhouse, M. H. Effect of dance synchrony on perceived levels of attractiveness: a pupillary dilation study. Poster at Society for Music Perception and Cognition Conference. San Diego, USA, 30 July-3 August 2017.

Abstract:

A significant amount of research has sought to link human entrainment and dance with social bonding, affiliation and communication. Consistent with this research, eye-movement experiments have shown increased gaze times for people observing dancers moving to a synchronous musical beat versus asynchronous, suggesting that there is a preference for audio-visual congruence, particularly with respect to dance. In contrast to bonding and socially motivated research, this study sought to investigate a complementary but alternative evolutionary-adaptive explanation for the geographical and historical ubiquity of dance: namely, that dance evolved to increase the likelihood of procreation. In this eye-tracking study, participants watched seven choreographed dance videos, each with a different level of synchrony: pairs of dancers performed to fast-, medium-, or slow-tempo music, and each dancer performed fast or slow choreography. This resulted in the following conditions: (1) dancers and music completely synchronous; (2) only one dancer synchronous with music; (3) dancers synchronous with each other but not with music; and (4) dancers and music completely asynchronous. While participants watched the videos, eye-movements, pupil dilations, and subjective ratings of attractiveness were recorded. Results showed that synchronous dancers were perceived as more attractive (according to pupil dilations and attractiveness ratings). However, significant differences between only some of the conditions suggest that a hierarchy of synchrony between the dancers drove differences in perceived attractiveness; e.g. lower attractiveness ratings were obtained for Condition 3 (only one dancer synchronous with music) than Condition 4 (complete asynchrony). This study contributes to a more nuanced understanding of dance’s possible evolutionary origins, and provides further evidence that pupil dilation can be used as a proxy for attraction.

Poster:

Citations:

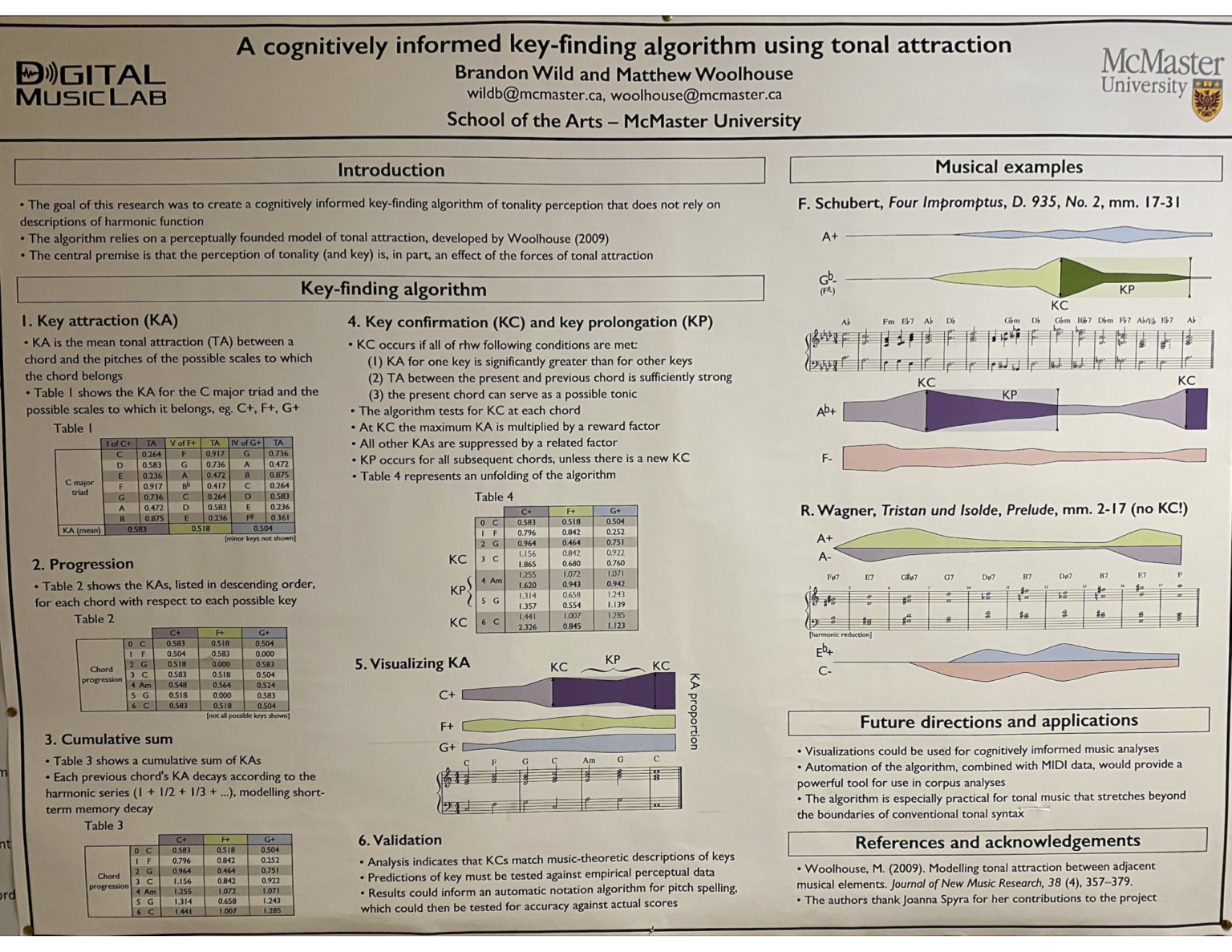

Wild, B. & Woolhouse, M. H. A cognitively informed key-finding algorithm using tonal attraction. Poster at Society for Music Perception and Cognition Conference. San Diego, USA, 30 July-3 August 2017.

Abstract:

The goal of this research was to create a cognitively informed key-finding algorithm of tonality perception that does not rely on descriptions of harmonic function borrowed from music theory. The central premise of the algorithm is that the perception of tonal-harmonic music is, in part, an effect of the forces of “tonal attraction” at play in a musical composition. As a result, the algorithm relies on a perceptually founded model of tonal attraction, developed by Woolhouse (2009). The algorithm accepts as its input a harmonic progression and then calculates the tonal attraction of each constituent harmonic element towards any possible key. As the algorithm steps through the progression, a running sum of attraction determines the tonality (i.e. the tonal location towards which the chords are most strongly attracted). The chord-by-chord nature of the algorithm makes it possible to produce visualizations that demonstrate the emerging tonal properties of a given musical sequence as it unfolds over time. The key-finding algorithm has been applied to numerous chord sequences drawn from repertoire of the Western common practice period. Thus far it has performed successfully when tested against conventional music-theoretic descriptions of key. Future research will test the algorithm’s predictions against empirically gathered perceptual data. In addition to furthering our understanding of tonality perception, it is expected that the key-finding algorithm will be applicable to cognitively informed music analysis, and, given its independence from symbolic notation, in automatic transcription algorithms.
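The chord-by-chord running sum described above can be illustrated with a small sketch. It shows only the accumulation step: the attraction function used here, `toy_attraction`, is a hypothetical stand-in (the share of chord tones lying in a key's major scale) and is not the Woolhouse (2009) tonal-attraction model, whose details the abstract does not give. Chords are assumed to be tuples of pitch classes (0 = C), and keys are limited to the 12 major keys for brevity.

```python
MAJOR_SCALE = (0, 2, 4, 5, 7, 9, 11)  # scale degrees in semitones above the tonic

def toy_attraction(chord, key):
    """Hypothetical stand-in for a tonal-attraction model.

    Returns the fraction of chord tones that belong to the major scale of
    `key`. A perceptually grounded model would replace this function.
    """
    scale = {(key + degree) % 12 for degree in MAJOR_SCALE}
    return sum(1 for pc in chord if pc % 12 in scale) / len(chord)

def running_key_estimates(progression, attraction=toy_attraction):
    """Return the best-supported key (0-11) after each chord.

    Attraction towards every candidate key is accumulated chord by chord;
    ties are resolved in favour of the lowest pitch class.
    """
    totals = [0.0] * 12
    estimates = []
    for chord in progression:
        for key in range(12):
            totals[key] += attraction(chord, key)
        estimates.append(max(range(12), key=lambda k: totals[k]))
    return estimates

# I-IV-V-I in C major, written as pitch-class tuples.
progression = [(0, 4, 7), (5, 9, 0), (7, 11, 2), (0, 4, 7)]
print(running_key_estimates(progression))
```

Because the estimate is recomputed after every chord, the list of per-chord estimates is the kind of data from which an unfolding-tonality visualization could be drawn.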

Poster:

Citations:

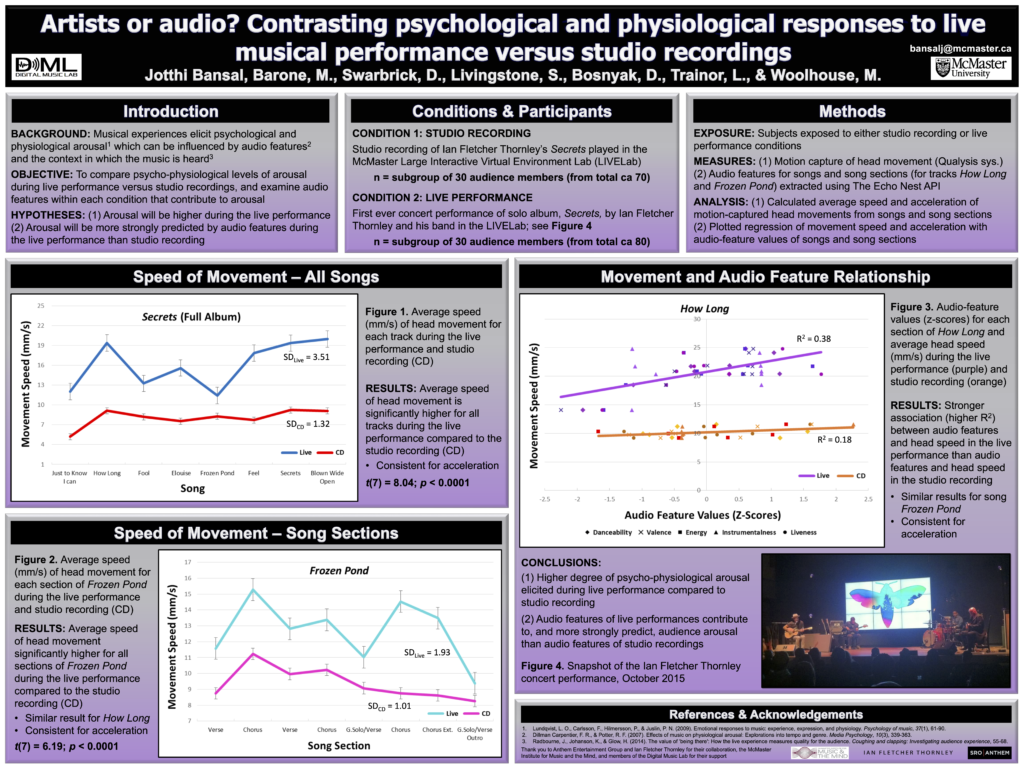

Bansal, J., Barone, M., Swarbrick, D., Livingstone, S., Trainor, L. & Woolhouse, M. H. Artists or audio? Contrasting psychological and physiological responses to audio recordings versus live musical performance. Poster at Society for Music Perception and Cognition Conference. San Diego, USA, 30 July-3 August 2017.

Abstract:

Research exploring live musical performance has found substantial effects of performer presence on psychological arousal. We investigated this effect in the context of an album-launch event for Canadian rock artist Ian Fletcher Thornley. The event, which took place in McMaster University’s Large Interactive Virtual Environment auditorium, featured an experiment investigating psychological and physiological responses to an audio recording versus live musical performance. 80 participants were exposed to either (1) a studio recording of Thornley’s Secrets, presented through an active acoustic system, or (2) a live concert, containing the same musical material as the studio recording, performed by Thornley and his band. Before and after each session, subjective psychological data was collected via a Likert-type survey. Throughout each session, the head movements of 60 participants (ca. 30 per session) were recorded using a passive-marker infrared motion-capture system. Analysis of the motion-capture data revealed a greater degree of head movement during the live performance, indicating increased psycho-physiological arousal. Subsequent analysis aimed to uncover the acoustic features associated with the higher level of movement during the live performance. Using EchoNest, an application program interface through which acoustic features (e.g. danceability, valence, tempo, and energy) can be extracted from uploaded audio, tracks from Thornley’s live performance and studio album were analyzed. Simultaneous multiple regression indicated that movement (speed and acceleration) was more strongly influenced by the acoustic features in the live performance than the audio recording. This suggests that the presence of the performers in the live session had a coupling effect, linking the audio with the audience members’ movements, which we interpret as a proxy for engagement.
Follow-up analysis is exploring whether, in addition to performer presence, audio characteristics are altered by musician expressivity during live performance, which may increase audience arousal. This study reveals the powerful effects of live musical performance on the mind and body of the observer.

Poster:

Citations:

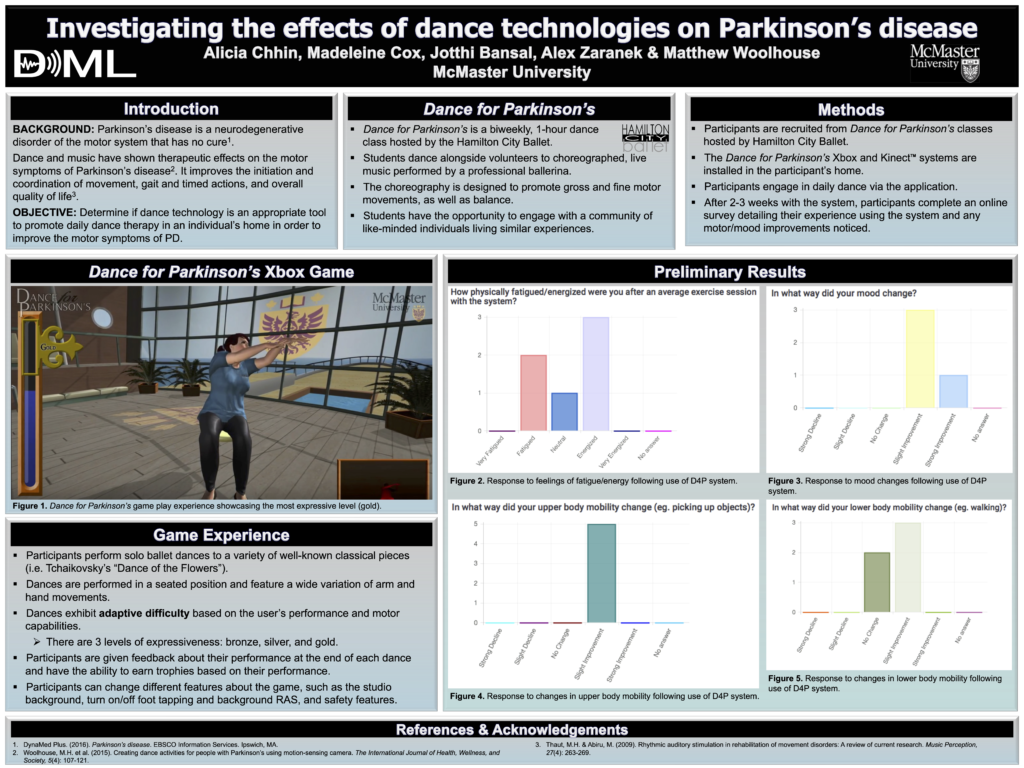

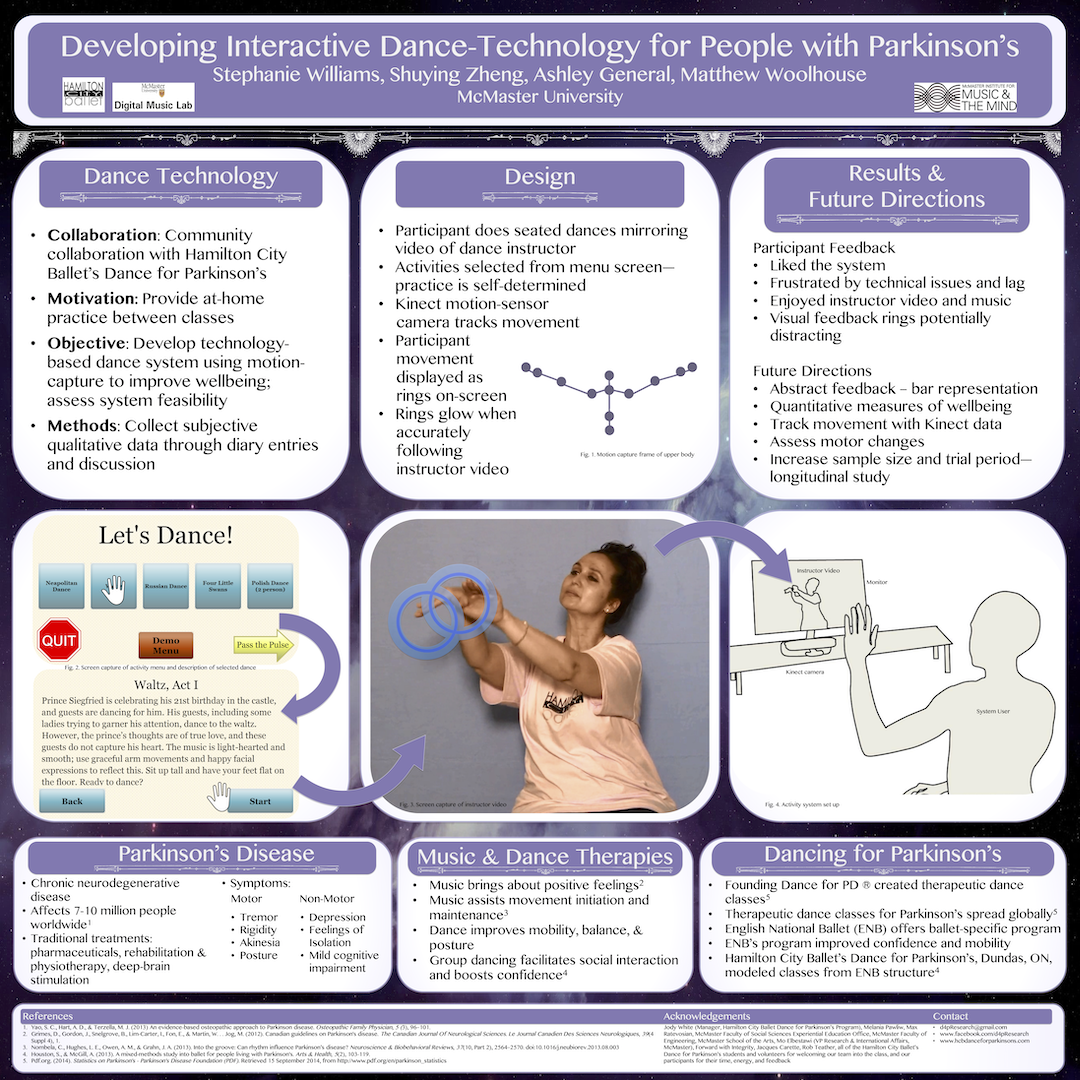

Chhin, A., Cox, M., Bansal, J., Zaranek, A. & Woolhouse, M. H. Investigating the effects of dance technologies on Parkinson’s disease. Poster at 9th Biennial Conference of the Canadian Gerontological Nursing Association. Ottawa, Canada, 4-6 May 2017.

Abstract:

Parkinson’s disease (PD) is a progressive movement disorder that affects motor control. As a complement to pharmacotherapy, dance has been found to improve motor symptoms. Dance helps individuals with PD to initiate movements, allowing for smoother, planned motions. Dance for Parkinson’s (D4P) is a dance-therapy intervention designed to assist the motor symptoms of PD. However, D4P classes run on a biweekly basis, which disrupts the potential benefits of daily dance practice. To address this concern, this study is piloting a technology-based application using the Microsoft Xbox and Kinect system, which people can use daily in the comfort of their homes. The application contains dance avatars modelled from recorded motion data of a ballet dancer. All of the dances in the application are conducted from a seated position to reduce the risk of falls, and to enable participants to use the program without a caregiver’s assistance. Additionally, the application’s menu system can be navigated using simple hand motions (rather than the Xbox controller). Via the Kinect, the application observes participants’ movements and modifies the dance avatar in real time based on the users’ movement accuracy. If the participant consistently fails to match the avatar’s movements, then the difficulty of the dance is automatically adjusted. This study will report and assess participants’ detailed feedback on their experiences with the application to determine the suitability of these types of technologies for PD populations. Future studies will measure the long-term changes in motor symptoms when using dance therapy as a palliative treatment for PD.

Poster:

Citations:

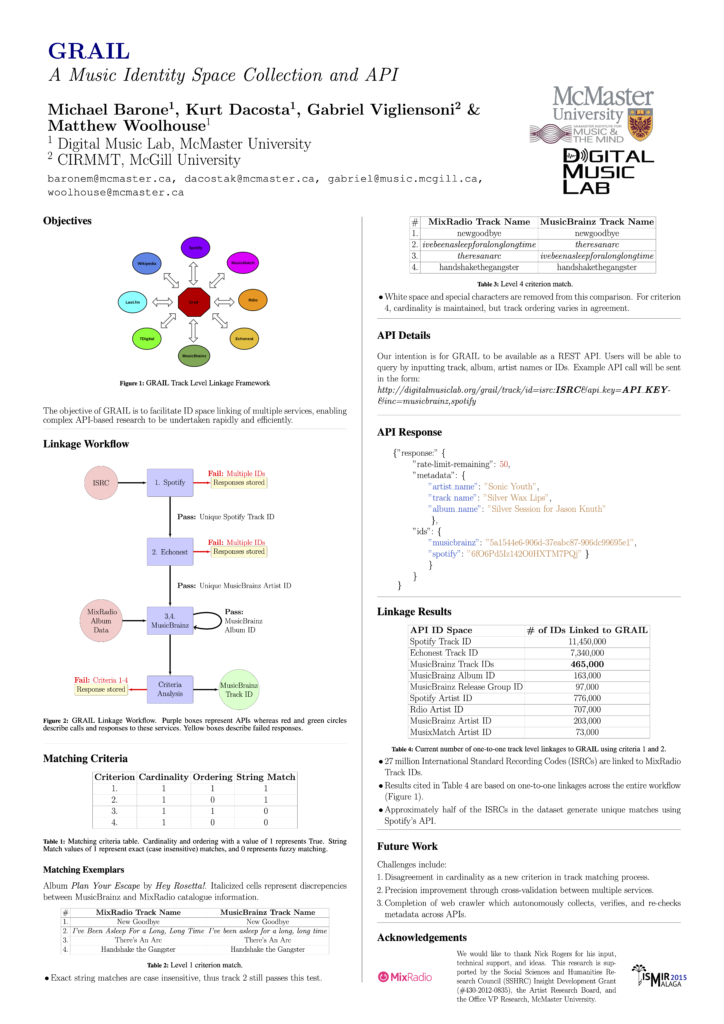

Barone, M., Dacosta, K., Vigliensoni, G., & Woolhouse, M. H. GRAIL: A General Recorded Audio Identity Linker. Poster at 3rd International Digital Libraries for Musicology Workshop (DLfM), New York, USA, 12 August 2016.

Abstract:

Linking information from multiple music databases is important for MIR because it provides a means to determine consistency of metadata between resources/services, which can help facilitate innovative product development and research. However, as yet, no open access tools exist that persistently link and validate metadata resources at the three main entities of music data: artist, release, and track. This paper introduces an open access resource which attempts to address the issue of linking information from multiple music databases. The General Recorded Audio Identity Linker (GRAIL – api.digitalmusiclab.humanities.mcmaster.ca) is a music metadata ID-linking API that: i) connects International Standard Recording Codes (ISRCs) to music metadata IDs from services such as MusicBrainz, Spotify, and Last.FM; ii) provides these ID linkages as a publicly available resource; iii) confirms linkage accuracy using continuous metadata crawling from music-service APIs; and iv) derives consistency values (CV) for linkages by means of a set of quantifiable criteria. To date, more than 35M tracks, 8M releases, and 900K artists from 16 services have been ingested into GRAIL. We discuss the challenges faced in past attempts to link music metadata, the methods and rationale which we adopted in order to construct GRAIL and to ensure it remains updated with validated information.

Poster:

Citations:

Barone, M., Dacosta, K., Vigliensoni, G., & Woolhouse, M. H. GRAIL: A music metadata identity API. Extended abstract and poster at International Society for Music Information Retrieval Conference. New York, USA, 7-11 August 2016.

Abstract:

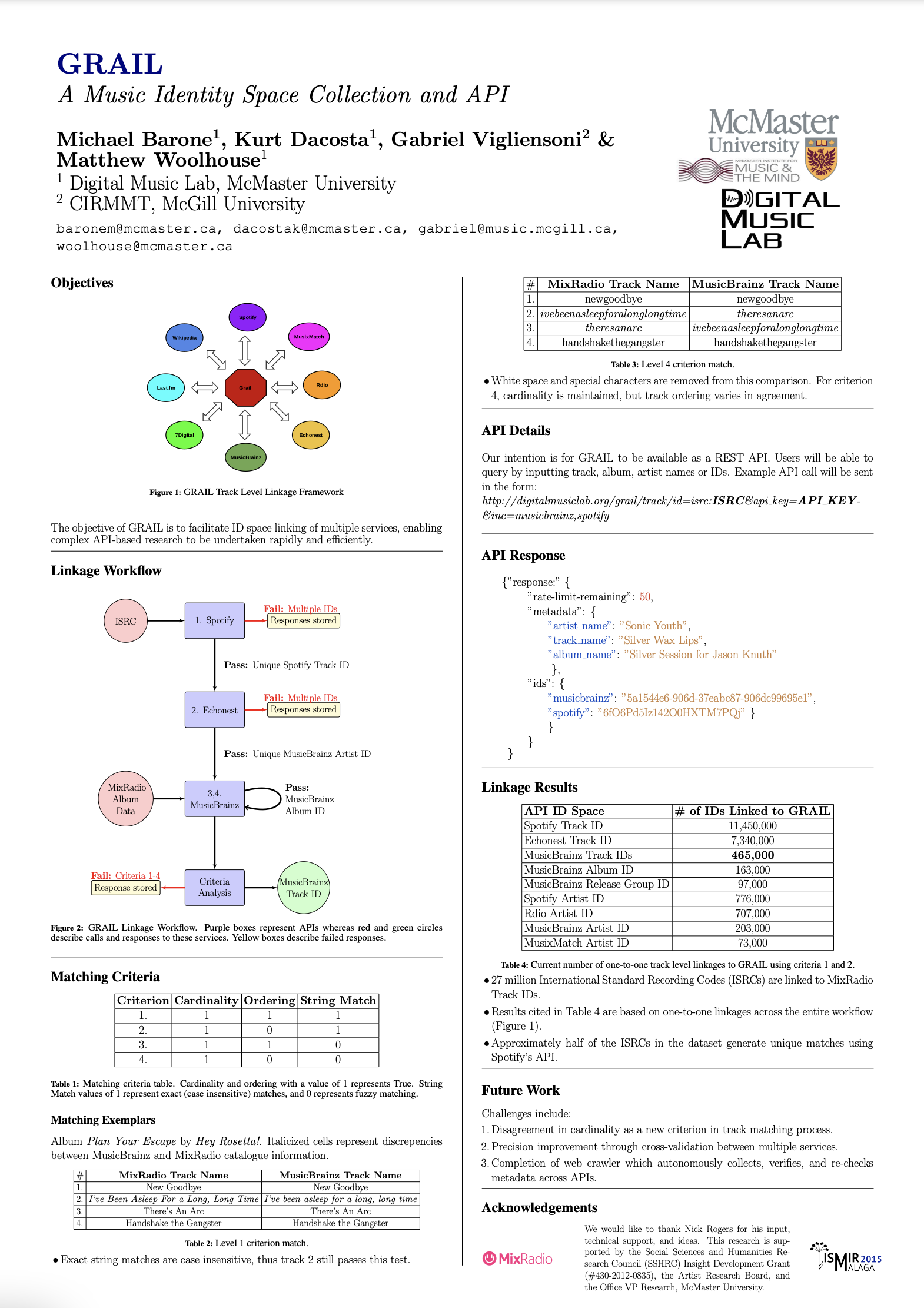

1.1 Introduction

Services such as MusicBrainz, Echonest, Last.fm, and MusixMatch provide valuable access to their growing corpora of music information. Although useful to the academic community, information collected, generated, and organized by these services can be under-utilized due to a scarcity of accurate ID matches at the track level. We introduce GRAIL, an Application Program Interface (API) that links track IDs from diverse online music services using relatively strict matching criteria. GRAIL encourages traffic to these services by enabling developers and researchers to innovate through combining music APIs with relative ease. To our knowledge, there are no organized academic efforts attempting to link track IDs of digital-music services. We describe our scalable architecture and data verification process, as well as explore the challenges in collating digital-music information from disjoint resources.

As the music-research community continues to expand its use of rich data sources, for example as provided through Application Program Interfaces (APIs), unique opportunities for population-level research become increasingly possible. APIs can be an extremely useful resource for performing analytics relevant to virtually any major research topic within music. While accessing an API is relatively straightforward, many research questions require utilizing more than one data source to innovate and discover. For example, linguists may be interested in relating and comparing patterns within lyrics to geographic features using MusixMatch and MusicBrainz, cognitive psychologists may wish to examine the accuracy of low- and high-level acoustic analysis algorithms used by AcousticBrainz and Echonest, and social scientists can investigate global listening trends and social-network analysis through Last.fm and Spotify. However, despite this and other research, there is a scarcity of interdisciplinary, population-level analysis of music and consumption research due to a lack of trustworthy linkages between platforms developed by these distinct entities. The motivation for GRAIL is to overcome this obstacle, and thereby enable complex research to be undertaken rapidly without fear of inaccurate data.

2.1 Methods

As part of a 5-year data-sharing agreement between MixRadio and the Digital Music Lab at McMaster University, ca. 27 million International Standard Recording Codes (ISRCs), linked to MixRadio track IDs, have been stored in a relational database. ISRCs can be considered a reliable and “atomic” track-level identifier because they are issued to tracks during the mastering process. ISRCs are atomic because they retain their identifier so long as the recording hasn’t been edited, remixed, or remastered. We match identity spaces through a combination of ISRC linkages and other commonly shared data provided by APIs. Data verification is handled through strict matching criteria, including checks of album cardinality, track ordering, and string matching of artist, album, and track titles. Identifiers were collected using Python 2.6, stored in MySQL 5.1, and hosted on Apache SOLR 5.1 for demonstration. ISRCs are matched to Spotify, Echonest, MusicBrainz, MixRadio, Rdio, OpenAura, and MusixMatch IDs at varying levels; our matching process is described below.

2.2 Identity Spaces

As represented in Step 1 of Figure 1, Spotify track IDs are gathered using ISRCs. Unique matches are ingested into GRAIL, and ISRCs returning multiple Spotify IDs are stored for later processing. In Step 2, the Echonest API is utilized to acquire artist-level IDs. Project Rosetta Stone was initiated by Echonest to achieve artist level matching of API identity spaces. Unique MusicBrainz artist IDs are ingested if a single ID is returned in the response. Using this process, ca. 225,000 MusicBrainz artists were linked through Echonest. In Step 3 we input MusicBrainz artist IDs and MixRadio album names into the MusicBrainz API. If MusicBrainz returns a single album, we ingest the MusicBrainz album ID into GRAIL. If multiple albums are returned, we store the MusicBrainz album group ID instead. A successful ingestion requires that there is agreement in album-track cardinality between MixRadio and MusicBrainz.
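The rule applied in Step 1 (ingest unique matches, set aside ambiguous ones) can be sketched as follows. This is an illustrative sketch only: the function name `partition_matches`, the input shape (a dictionary from ISRC to the candidate IDs a service returned), and the example identifiers are all hypothetical, and no real service API is called.

```python
def partition_matches(candidates):
    """Split lookup results into unique links and ambiguous cases.

    `candidates` maps each ISRC to the list of IDs returned for it by some
    service (hypothetical input shape). ISRCs with exactly one distinct ID
    are linked; ISRCs with several are deferred for later processing;
    ISRCs with no result are skipped.
    """
    linked, deferred = {}, {}
    for isrc, ids in candidates.items():
        distinct = sorted(set(ids))
        if len(distinct) == 1:
            linked[isrc] = distinct[0]
        elif len(distinct) > 1:
            deferred[isrc] = distinct
    return linked, deferred

# Made-up identifiers, for illustration only.
lookup = {
    "ISRC-A": ["track-1"],
    "ISRC-B": ["track-2", "track-3"],
    "ISRC-C": [],
}
linked, deferred = partition_matches(lookup)
print(linked)    # unique matches, ready to ingest
print(deferred)  # ambiguous matches, kept for later processing
```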

2.3 Track Matching Criteria

MixRadio metadata, including track names and ordering, are used as the basis on which MusicBrainz IDs are linked to ISRCs. In Step 4, we input successfully linked album IDs back into MusicBrainz to return a series of ordered MusicBrainz track IDs and verify that track-to-album matches are accurate using 4 levels of criteria (Table 1). For all constraints, tracks must maintain case-insensitive string matches. Additional conditions for constraints are described in Table 1. These conditions consider track ordering and string cleaning prior to the matching process. Each criterion loosens its restrictions slightly. Criterion 1 contains the strictest requirements, whereas Criterion 4 contains the loosest.
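One way to read the four criteria is as a cascade that returns the strictest criterion a pair of track lists satisfies, using the cardinality, ordering, and string-match flags listed in Table 1. The sketch below follows that reading under several assumptions: the fuzzy matcher (`difflib.SequenceMatcher` with a 0.85 threshold), the greedy unordered comparison, and all function names are illustrative choices, since the extended abstract does not specify them, and no string cleaning is performed.

```python
from difflib import SequenceMatcher

def exact(a, b):
    """Case-insensitive exact title match."""
    return a.casefold() == b.casefold()

def fuzzy(a, b, threshold=0.85):
    """Assumed fuzzy match: similarity ratio at or above `threshold`."""
    return SequenceMatcher(None, a.casefold(), b.casefold()).ratio() >= threshold

def ordered_match(ref, cand, same):
    """Every title matches the title at the same position."""
    return all(same(a, b) for a, b in zip(ref, cand))

def unordered_match(ref, cand, same):
    """Every reference title matches some unused candidate title (greedy)."""
    remaining = list(cand)
    for a in ref:
        hit = next((b for b in remaining if same(a, b)), None)
        if hit is None:
            return False
        remaining.remove(hit)
    return True

def strictest_criterion(ref, cand):
    """Return 1-4 for the strictest criterion satisfied, or None."""
    if len(ref) != len(cand):          # cardinality is required by all four
        return None
    if ordered_match(ref, cand, exact):
        return 1
    if unordered_match(ref, cand, exact):
        return 2
    if ordered_match(ref, cand, fuzzy):
        return 3
    if unordered_match(ref, cand, fuzzy):
        return 4
    return None

# Made-up track titles, for illustration only.
reference = ["Intro", "Blue Sky", "Outro"]
print(strictest_criterion(reference, ["intro", "blue sky", "outro"]))
print(strictest_criterion(reference, ["Outro", "Intro", "Blue Sky"]))
```

The greedy unordered comparison can miss a valid pairing when fuzzy matches overlap; a production matcher would likely need a proper assignment step.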

3.1 API Details

Our intention is that GRAIL will be available as a REST API through the URL www.digitalmusiclab.org/grail/. Users will be able to input track, album, or artist names or IDs from a documented list of participating services. Data from GRAIL will be returned in JSON or XML format. A detailed description of input parameters and example output format are outlined in our poster. GRAIL will require an API key, obtained through free registration, to access data. Our plan is for API keys to have reasonable rate limits based on server restrictions. Terms of service for GRAIL include that we do not own the ID spaces we have linked, but rather the linking of ID spaces is the intellectual property. In all likelihood, the terms will state that this API is for and by the creative commons, and is designed for research with music information retrieval. GRAIL cannot be utilized for profit without direct permission of the services used in a new application.

4.1 Future Work

Future work entails continued development of data-cleaning processes, implementation of new criteria, and the execution of Criteria 3 and 4. A unique problem in track matching involves albums where track cardinality is in disagreement. If all track names and ordering are matched between data services, except for a missing track, the album should be considered a strong match, but such albums are currently not included in our analysis. In these cases, determining accuracy is problematic because ground truth is unclear. Linking these tracks based on these characteristics could be inaccurate, and requires continued refinement of matching procedures going forward. Secondly, data verification across multiple APIs will be necessary. Comparing the accuracy of metadata across APIs (such as comparing Spotify to Rdio track information) is a crucial next step that will highlight the level of agreement across the digital-music industry with respect to the information they provide to customers.

Table 1. MusicBrainz track matching criteria. Cardinality and ordering with a value of 1 represents True. String Matching of 1 represents exact matches, whereas 0 represents fuzzy matching. Criterion 1 is considered the strongest match, while criterion 4 is considered the weakest.

| Criterion | Cardinality | Ordering | String Match |
|---|---|---|---|
| 1 | 1 | 1 | 1 |
| 2 | 1 | 0 | 1 |
| 3 | 1 | 1 | 0 |
| 4 | 1 | 0 | 0 |

Table 2. Number of identity spaces successfully linked to GRAIL using Criteria 1 and 2

| API ID Space | # of IDs Linked to GRAIL |
|---|---|
| Spotify Track ID | 11,454,349 |
| Echonest Track ID | 7,340,920 |
| Rdio Artist ID | 707,620 |
| MusicBrainz Artist ID | 203,923 |
| MusicMatch Artist ID | 73,459 |
| MusicBrainz Album ID | 160,000 |
| Spotify Artist ID | 776,620 |
| MusicBrainz Release Group ID | 70,000 |
| MusicBrainz Track IDs | 450,000+ |

Poster:

Citations:

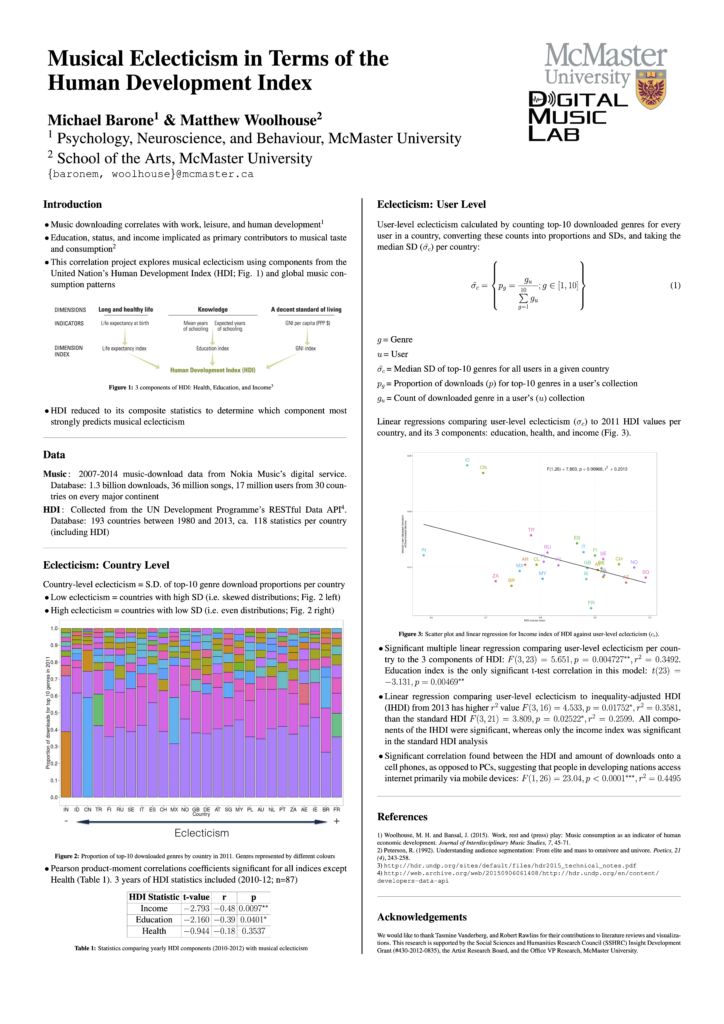

Barone, M. & Woolhouse, M. H. Musical eclecticism in terms of the human development. Poster at 14th International Conference on Music Perception & Cognition. San Francisco, USA, 5-9 July 2016.

Abstract:

Recent sociological research suggests a link between levels of education and musical eclecticism. Our research uses the components of the Human Development Index (HDI) to explore the relationship between music consumption, and human economic development in various countries across the world. Given its scale—the HDI represents the quality of life in a country with a single number—our research uses large-scale meta-analyses of multiple countries rather than individual case studies. We build upon previous research exploring how music consumption is intimately connected to our working lives (Woolhouse & Bansal, 2013). We examine if the 3 component statistics that comprise the HDI correlate with country-level genre-download preferences. A music-consumption database, consisting of over 1.3 billion downloads onto mobile phones from 2007-14, is used to explore the relationship between the HDI and patterns of music listening. The study was made possible with a data-sharing and cooperation agreement between McMaster University and MixRadio, a digital music streaming service. The general method, employed primarily for its statistical simplicity, is to correlate genre-dispersion patterns with HDI components, including education, life expectancy, and income. Significant correlations are reported between components of the HDI and genre eclecticism: Countries with higher income and education values tend to download more diverse genres, and are more eclectic in their genre preferences. Other measures related to the HDI, such as the Inequality-Adjusted HDI, demonstrate similar significant correlations. Levels of human economic development, as represented in the HDI, appear to influence the degree to which people are willing to explore diverse musical genres. Cultural eclecticism, or “omnivorousness”, is arguably a function of access to consumption-based leisure, a life quality afforded to individuals living in countries higher on the HDI spectrum.
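The general method (correlating genre dispersion with an HDI component) can be sketched in a few lines. The abstract does not say how genre dispersion was measured, so this sketch assumes Shannon entropy over a country's genre-download counts and a plain Pearson correlation; both function names and the example counts are hypothetical.

```python
from math import log2, sqrt

def genre_entropy(download_counts):
    """Shannon entropy (bits) of a genre -> download-count mapping.

    Assumed dispersion measure: higher values mean downloads are spread
    more evenly across genres, i.e. more eclectic consumption.
    """
    total = sum(download_counts.values())
    probs = [c / total for c in download_counts.values() if c > 0]
    return -sum(p * log2(p) for p in probs)

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

# Made-up counts for one country, for illustration only.
print(genre_entropy({"pop": 50, "rock": 30, "classical": 20}))
```

In use, one entropy value per country would be paired with that country's value for an HDI component (education, life expectancy, or income) and the two lists passed to `pearson_r`.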

Poster:

Citations:

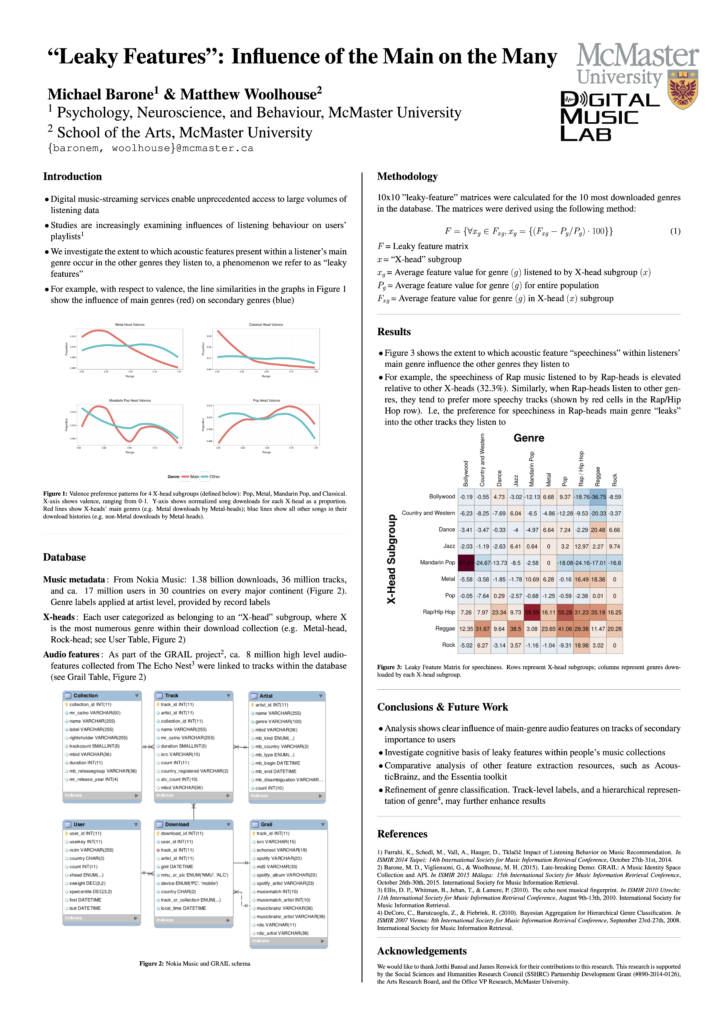

Barone, M. & Woolhouse, M. H. “Leaky Features”: Influence of the main on the many. Poster at 14th International Conference on Music Perception & Cognition. San Francisco, USA, 5-9 July 2016.

Abstract:

The digitization and streaming of music online has resulted in unprecedented access to high-quality music-information and user-consumption data. In particular, publicly available resources such as the Echo Nest have analyzed and extracted audio features for millions of songs. We investigate whether preferences for audio features in a listener’s main genre are expressed in the other genres they download, a phenomenon we refer to as “leaky features”. For example, do people who prefer Metal listen to faster and louder Classical or Country music (Metal typically has a higher tempo than many other genres)? A music-consumption database, consisting of over 1.3 billion downloads onto mobile phones from 2007-14, is used to explore 10 audio features, grouped into 3 categories: rhythmic (danceability, tempo, duration), environmental (liveness, acousticness, instrumentalness), and psychological (valence, energy, speechiness, loudness). The study was made possible with a data-sharing and cooperation agreement between McMaster University and MixRadio, a global digital music streaming service. Audio features for 7 million songs were collected using the Echo Nest API, and linked to the MixRadio database. We found that audio features specific to users’ main genres “leaked” into other downloaded genres. Audio features that tend to be more exaggerated in one genre, such as “speechiness” in Rap music, typically demonstrate more leakiness than features of genres without this characteristic, such as Classical. “Energy” and “valence” features were also particularly significant. These results show that audio feature extraction algorithms are useful for examining which aspects of music influence our preference for new music, findings that have implications for music-recommendation systems. Additionally, our research demonstrates a unique approach to conducting psychological research exploring the extent to which musical features remain tied to specific genres.

Poster:

Citations:

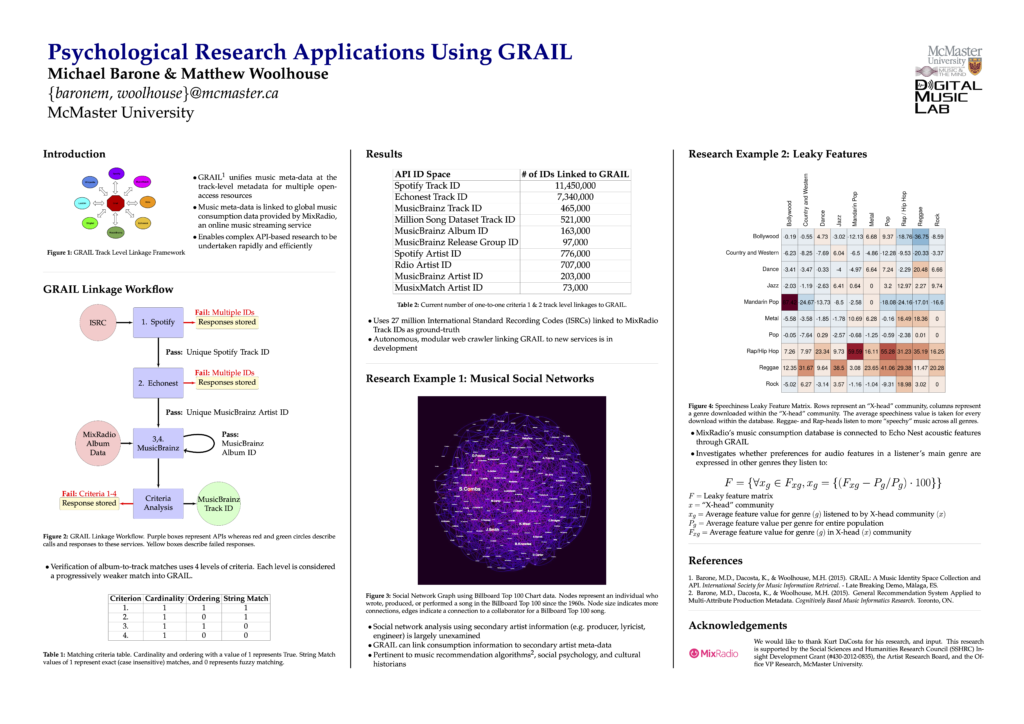

Barone, M. & Woolhouse, M. H. Psychological research applications using GRAIL. Poster at Lake Ontario Visionary Establishment Conference, Niagara, Canada, 4-5 February 2016.

Abstract:

We have created “GRAIL”, a music-track meta-data API that links multiple open-access data resources. For example, music meta-data is linked to global music consumption data provided by MixRadio, an online music streaming service. This enables complex API-based research to be undertaken rapidly and efficiently. GRAIL uses 27 million International Standard Recording Codes (ISRCs) linked to MixRadio Track IDs as ground-truth. Moreover, an autonomous, modular web crawler linking GRAIL to new services is in development. GRAIL allows music social-network analysis to be undertaken using secondary artist information (e.g. producer, lyricist, engineer). GRAIL can also link user consumption information to secondary artist meta-data, which is pertinent to music recommendation algorithms, and enables social-psychological and cultural musical questions to be investigated.

Poster:

Citations:

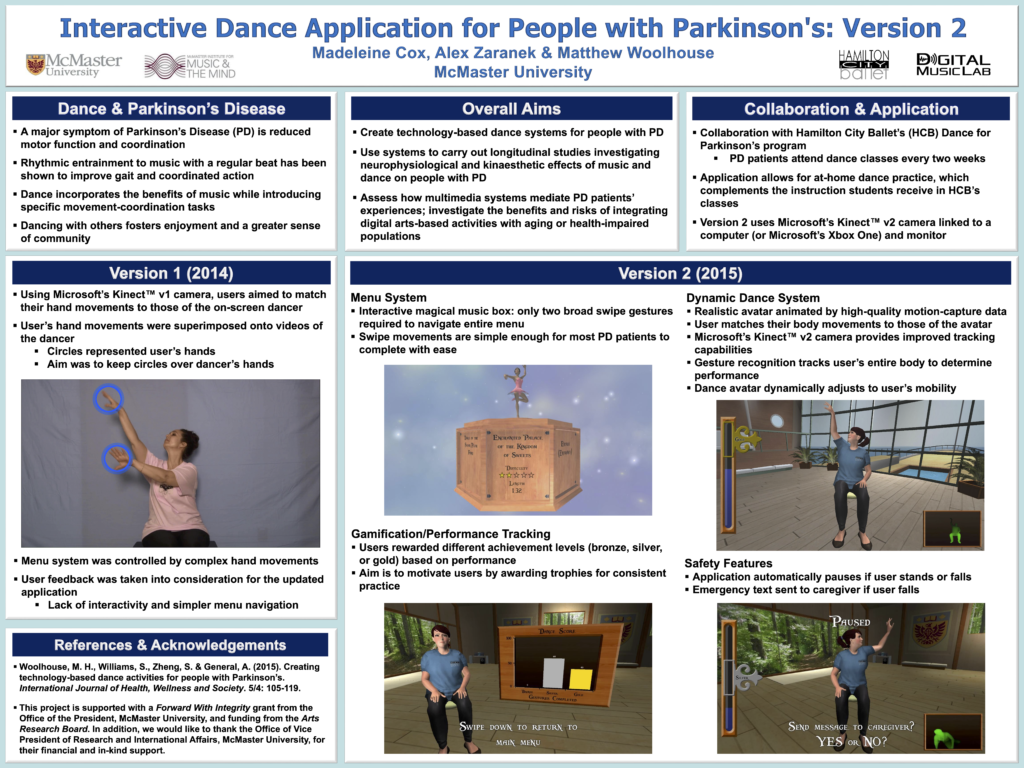

Cox, M., Zaranek, A., & Woolhouse, M. H. Interactive dance application for people with Parkinson’s: Version 2. Poster at NeuroMusic Conference, McMaster University, Canada, 19 November 2015.

Abstract:

There is growing evidence that music and dance have positive therapeutic effects for people with Parkinson’s disease (PD). Exposure to music with a clear rhythm has been shown to improve gait speed, stride length, and coordinated actions. Dancing incorporates the benefits of music while also introducing specific movement-coordination tasks. Hamilton City Ballet’s (HCB) Dance for Parkinson’s Program runs biweekly dance classes for people with PD, using choreography and music to provide these benefits for their students. Similar programs have demonstrated improvements in students’ quality of life and well-being. Since summer 2014, McMaster researchers and HCB’s Dance for Parkinson’s Program have been working together to create technology-based dance activities for their students. These activities are designed for home use, between HCB’s regular dance classes. Microsoft’s Kinect™ cameras input participants’ body movements into the system and calculate users’ progress within each activity. It is hoped that the introduction of technology-based activities closely linked to the Dance for Parkinson’s program will augment and enhance the benefits seen in similar programs.

Poster:

Citations:

Dacosta, K., Barone, M., Vigliensoni, G., & Woolhouse, M. H. GRAIL: A music identity space collection and API. Extended abstract and poster at International Society for Music Information Retrieval Conference (ISMIR). Málaga, Spain, 26-30 October 2015.

Abstract:

Services such as Last.fm, MusicBrainz, and MusixMatch provide valuable access to their comprehensive corpora of music metadata. Although very useful to the academic community, information generated by these services is under-utilized due to a scarcity of accurate ID matches at the track level. We introduce GRAIL, an open API in development that links track IDs from diverse online music services. GRAIL encourages traffic to these services by enabling developers and researchers to innovate through combining music APIs with relative ease. To our knowledge, there are no organized academic efforts attempting to open-source the linkage of track IDs of digital-music services. We describe our scalable architecture and data verification process, as well as explore the challenges in collating digital-music information from disjoint resources.

Poster:

Citations:

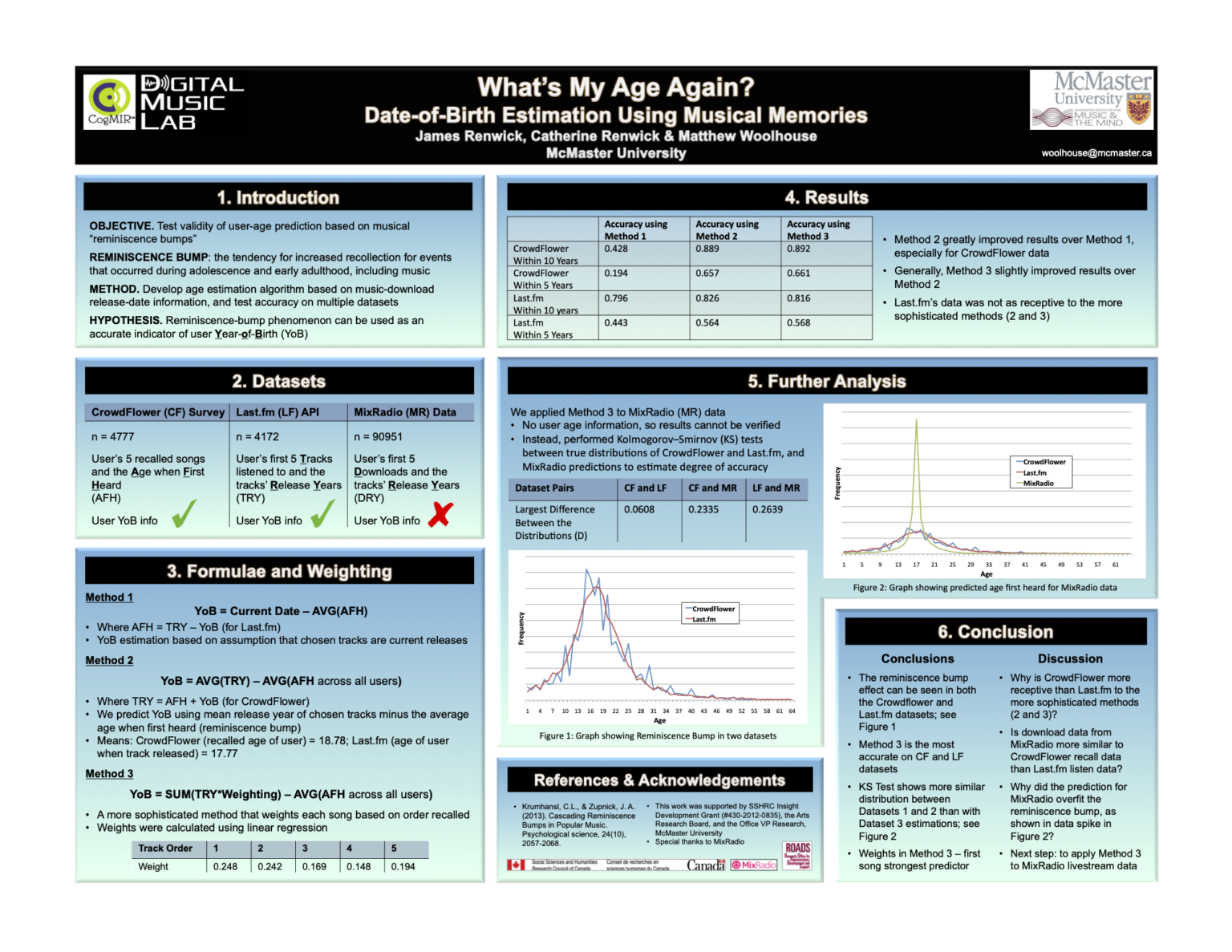

Renwick, J., Renwick, C. & Woolhouse, M. H. What’s my age again? Date-of-birth estimation using musical memories. Poster at Cognitively Based Music Informatics Research Seminar (CogMIR). Ryerson University, Canada, 5 September 2015.

Awarded Best Poster

Abstract:

We compare and contrast survey data on musical memories with patterns in digital streaming in an attempt to determine the age of users based on their consumption habits. User data was gathered from two different sources: a crowdsourcing survey and Last.fm’s API. The survey included data from ca. 5,000 participants who recalled songs and the age at which they first heard them. The listening histories of ca. 4,000 Last.fm users were recorded. Users from both datasets showed a strong tendency to listen to or recall songs released when they were between 15 and 20 years of age. We link these findings to psychological research showing a tendency for adults to have an increased recollection for events that happened during adolescence. Using a statistical regression model, an algorithm is created to estimate user date of birth based on musical preferences and this psychological effect. Lastly, the algorithm is tested to ascertain the veracity of this method for predicting user age in cases where this information is absent.
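
The idea behind the estimate can be illustrated with a deliberately simplified sketch. It is not the regression model described in the abstract: it assumes only that a user's songs cluster around a single peak age, taken here as 17.5 (the midpoint of the 15 to 20 range reported above). The function name, the constant, and the sample release years are hypothetical.

```python
from statistics import median

# Assumption: midpoint of the 15-20 age range reported in the abstract.
ASSUMED_PEAK_AGE = 17.5


def estimate_birth_year(release_years, peak_age=ASSUMED_PEAK_AGE):
    """Estimate a birth year from the release years of a user's songs.

    Takes the median release year as the centre of the user's listening
    history and subtracts the assumed peak age.
    """
    if not release_years:
        raise ValueError("at least one release year is required")
    return median(release_years) - peak_age


# Hypothetical listening history: release years of a user's recalled songs.
estimate = estimate_birth_year([1994, 1996, 1998])
print(estimate)
```

The median is used rather than the mean so that a few much older or newer songs do not shift the estimate strongly; a fitted regression, as in the poster, would replace the fixed offset.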

Poster:

Citations:

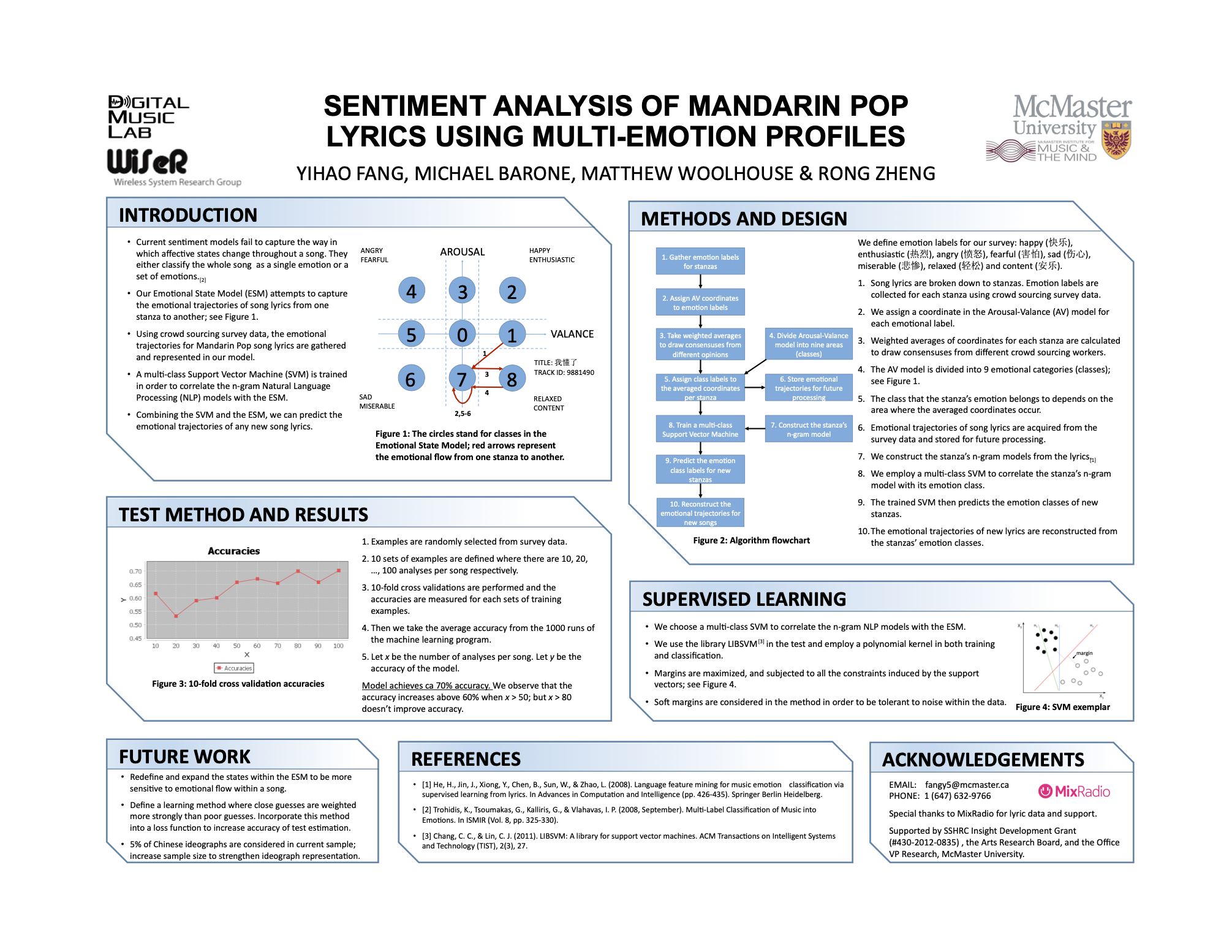

Fang, Y., Barone, M., Woolhouse, M. H. & Zheng, R. Sentiment analysis of Mandarin pop lyrics using multi-emotion profiles. Poster at Cognitively Based Music Informatics Research Seminar (CogMIR). Ryerson University, Canada, 5 September 2015.

Abstract:

Sentiment analysis is a subfield of natural language processing (NLP) which attempts to identify the emotional categories of text. While these techniques have produced impressive findings using machine learning, many issues remain. In particular, automated sentiment analysis classifies emotional content dichotomously, restricting classification to only one emotion. This categorical approach fails to capture the affective nuances of subjective artistic expression in text. We examine whether general linguistic patterns exist in Mandarin Pop lyrics using multi-emotion profiles coupled with machine learning and NLP. Using crowdsourced survey data, we gather multiple-emotion profiles for Mandarin Pop song lyrics. A multi-class support vector machine, constructed from these profiles and n-gram NLP models, is applied to the song lyrics. K-fold cross validation is then applied to analyze the efficacy of classification in the training data. We report the accuracy of this model for sentiment analysis and discuss implications and future directions based on our findings.

Poster:

Citations:

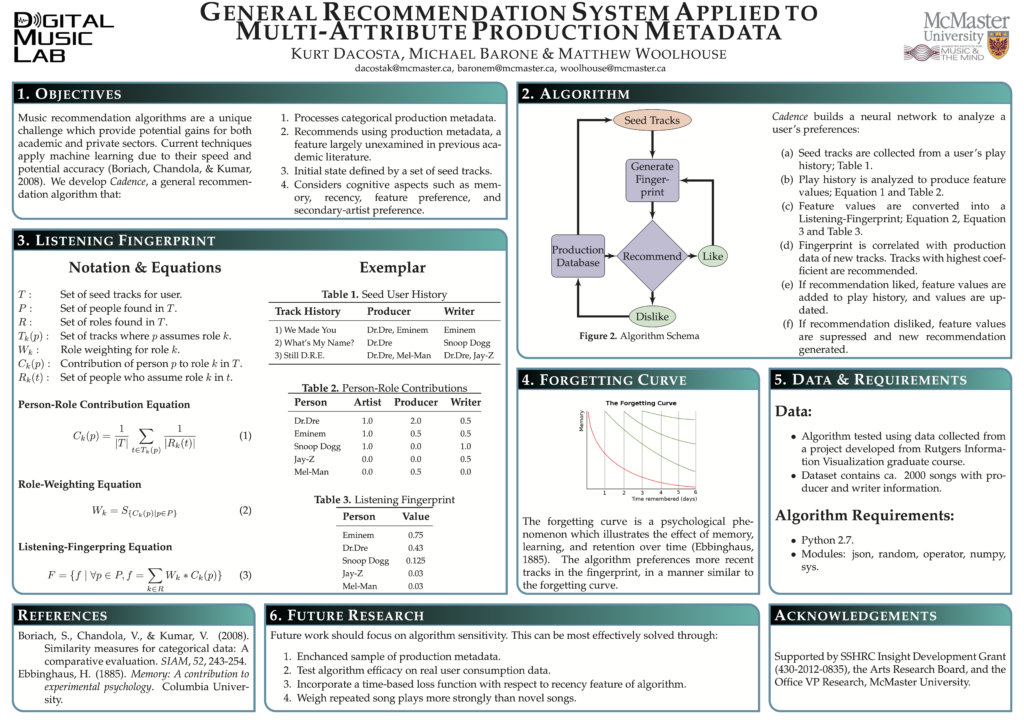

Dacosta, K., Barone, M. & Woolhouse, M. H. Music recommendation using multi-attribute categorical production metadata. Poster at Cognitively Based Music Informatics Research Seminar (CogMIR). Ryerson University, Canada, 5 September 2015.

Abstract:

Recommender systems have been examined quantitatively using a variety of methods including opinion mining, audio feature extraction, and social network analysis. We consider a novel addition to recommender systems using collaborative filtering. A feature space is created based on user preference for production attributes. Production metadata includes roles such as sound engineers, producers, lyricists, and session musicians that are vital to a recording, but are frequently overlooked in its creation. We combine this potentially neglected data with play-history information, including track recency, to create a production-preference profile (PPP) per person. These PPPs are used to generate similarity weightings with respect to a set of recommended seed tracks. Evaluation of this approach is currently being undertaken.

Poster:

Citations:

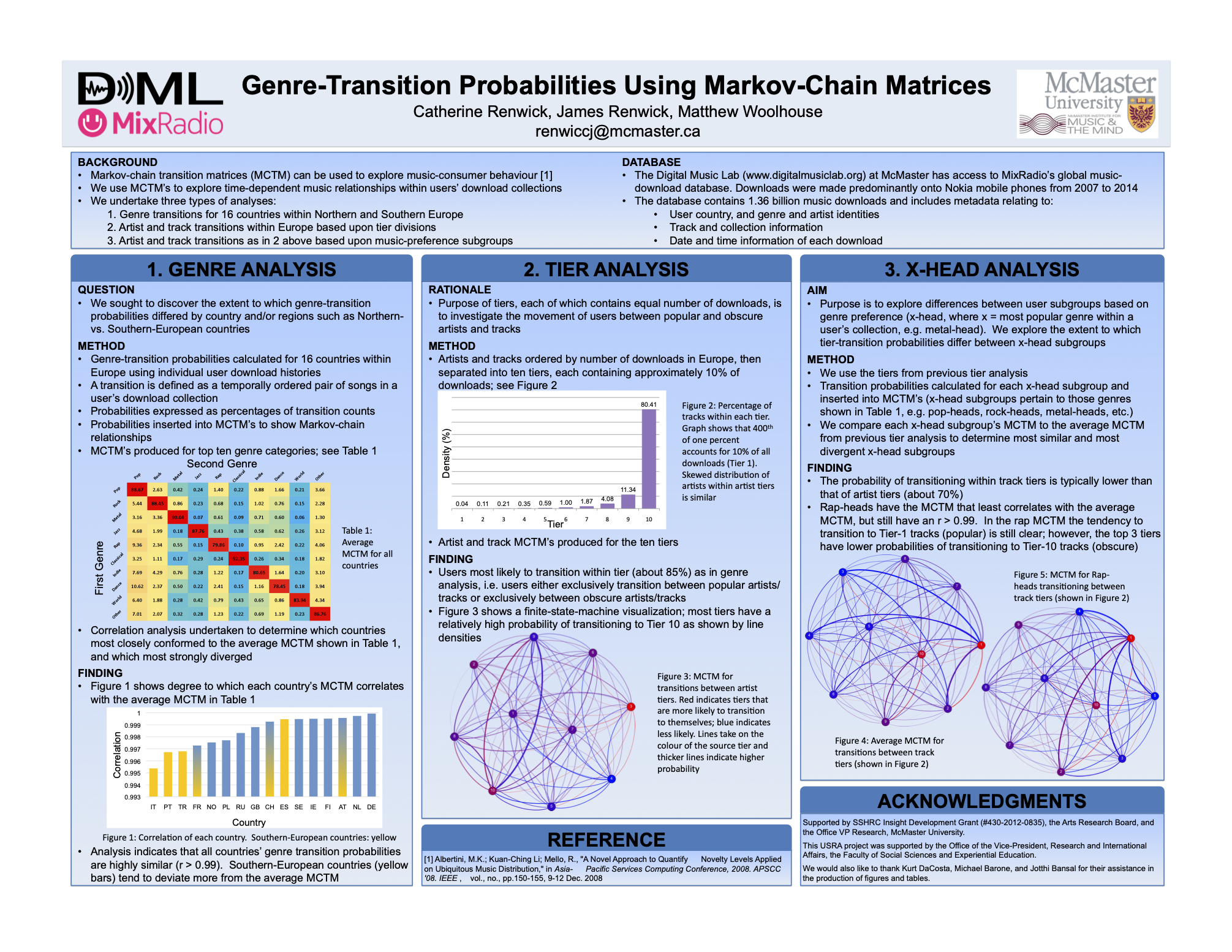

Renwick, C., Renwick, J. & Woolhouse, M. H. Genre transition probabilities using Markov chain matrices. Poster at Cognitively Based Music Informatics Research Seminar (CogMIR). Ryerson University, Canada, 5 September 2015.

Abstract:

We use statistical analysis of users’ digital-music download patterns to determine song, artist and genre transition probabilities. The investigation uses MixRadio’s global music-consumption database consisting of 1.36 billion downloads, which contains detailed temporal information. We classify the transitions between pairs of songs by genre, and derive probabilities representing average-user download trends by country. Probability values are then deployed in a Markov-chain transition matrix to demonstrate the temporal relationships between genres. Perhaps unsurprisingly, we find that users have a strong likelihood of downloading songs of the same genre in succession, ranging from 60-90%. Results also show that in some instances, the number of times a user transitions from one genre to the next can be 10-20 times greater than in the reverse direction. The asymmetry between transition probabilities could be explained by a number of factors, including disparity of genre representation within the database (although normalization procedures discount this to some degree), language barriers, or, more interestingly, that certain genres point users in particular musical directions.
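
As a minimal sketch of how such a transition matrix can be estimated, the example below counts consecutive genre pairs in download histories and normalizes each row to probabilities. The function name and the toy histories are hypothetical, and the sketch omits the per-country grouping and normalization procedures mentioned above.

```python
from collections import Counter, defaultdict


def transition_matrix(genre_sequences):
    """Estimate first-order genre transition probabilities.

    Each sequence is one user's downloads in temporal order, given as
    genre labels. Returns a nested dict: matrix[current][next] = P(next | current).
    """
    counts = defaultdict(Counter)
    for seq in genre_sequences:
        # Count every consecutive (current, next) genre pair.
        for current, nxt in zip(seq, seq[1:]):
            counts[current][nxt] += 1

    matrix = {}
    for current, next_counts in counts.items():
        total = sum(next_counts.values())
        # Normalize counts so that each row sums to 1.
        matrix[current] = {g: n / total for g, n in next_counts.items()}
    return matrix


# Hypothetical toy data, not taken from the MixRadio database.
histories = [
    ["Rock", "Rock", "Rock", "Pop"],
    ["Pop", "Pop", "Rock", "Rock"],
]
P = transition_matrix(histories)
for genre, row in P.items():
    print(genre, row)
```

Asymmetry of the kind reported in the abstract would show up as `P[a][b]` differing markedly from `P[b][a]` for a pair of genres `a` and `b`.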

Poster:

Citations:

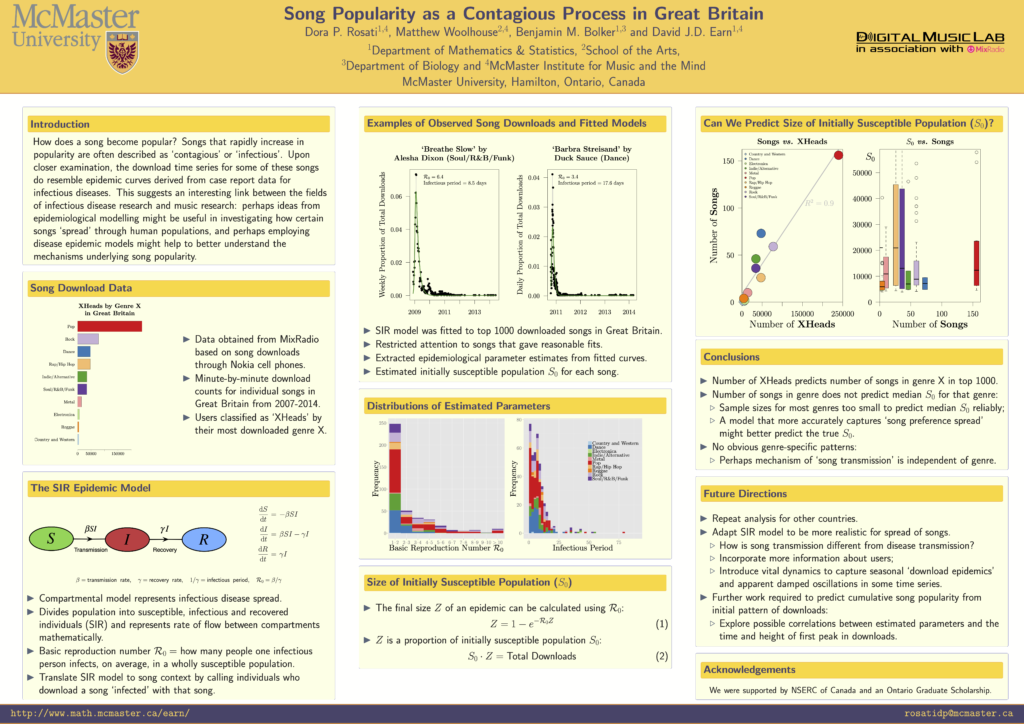

Rosati, D. P., Woolhouse, M. H., Bolker, B. M. & Earn, D. J. D. Song popularity as a contagious process in Great Britain. Poster at 13th Annual Ecology and Evolution of Infectious Disease conference. Classical Centre, Athens, Georgia, USA, 26-29 May 2015.

Rosati, D. P., Woolhouse, M. H. & Earn, D. J. D. Song popularity as a contagious process. Poster at Cognitively Based Music Informatics Research Seminar (CogMIR). Ryerson University, Canada, 4 October 2014.

Abstract:

How does a song become popular? Songs that rapidly increase in popularity are often described as ‘contagious’ or ‘infectious’. Upon closer examination, the download time series for some of these songs do resemble epidemic curves derived from case report data for infectious diseases. This suggests an interesting link between the fields of infectious disease research and music research: perhaps ideas from epidemiological modelling might be useful in investigating how certain songs ‘spread’ through human populations, and perhaps employing disease epidemic models might help to better understand the mechanisms underlying song popularity.
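
The abstract does not say which epidemic model is used. As an illustration only, the sketch below simulates the standard SIR (susceptible, infectious, recovered) model with simple Euler steps. Reading "susceptible" as listeners who have not yet picked up the song, "infectious" as listeners actively spreading it, and "recovered" as listeners who have lost interest is an assumption made here, as are all parameter values.

```python
def simulate_sir(beta, gamma, s0, i0, r0, days, dt=0.1):
    """Simulate the SIR model with Euler steps; return one (S, I, R) tuple per day.

    beta  -- transmission rate per day (assumed value, for illustration)
    gamma -- recovery rate per day (assumed value, for illustration)
    """
    s, i, r = float(s0), float(i0), float(r0)
    n = s + i + r  # total population, constant over time
    steps_per_day = round(1 / dt)
    history = [(s, i, r)]
    for _ in range(days):
        for _ in range(steps_per_day):
            new_infections = beta * s * i / n * dt
            new_recoveries = gamma * i * dt
            s -= new_infections
            i += new_infections - new_recoveries
            r += new_recoveries
        history.append((s, i, r))
    return history


# Hypothetical parameters: 10 initial "spreaders" in a population of 10,000.
history = simulate_sir(beta=0.5, gamma=0.1, s0=9990, i0=10, r0=0, days=100)
peak_day = max(range(len(history)), key=lambda d: history[d][1])
print("peak day:", peak_day)
```

The infectious count rises, peaks, and declines, which is the shape of curve that the download time series are compared with in the abstract.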

Poster:

Citations:

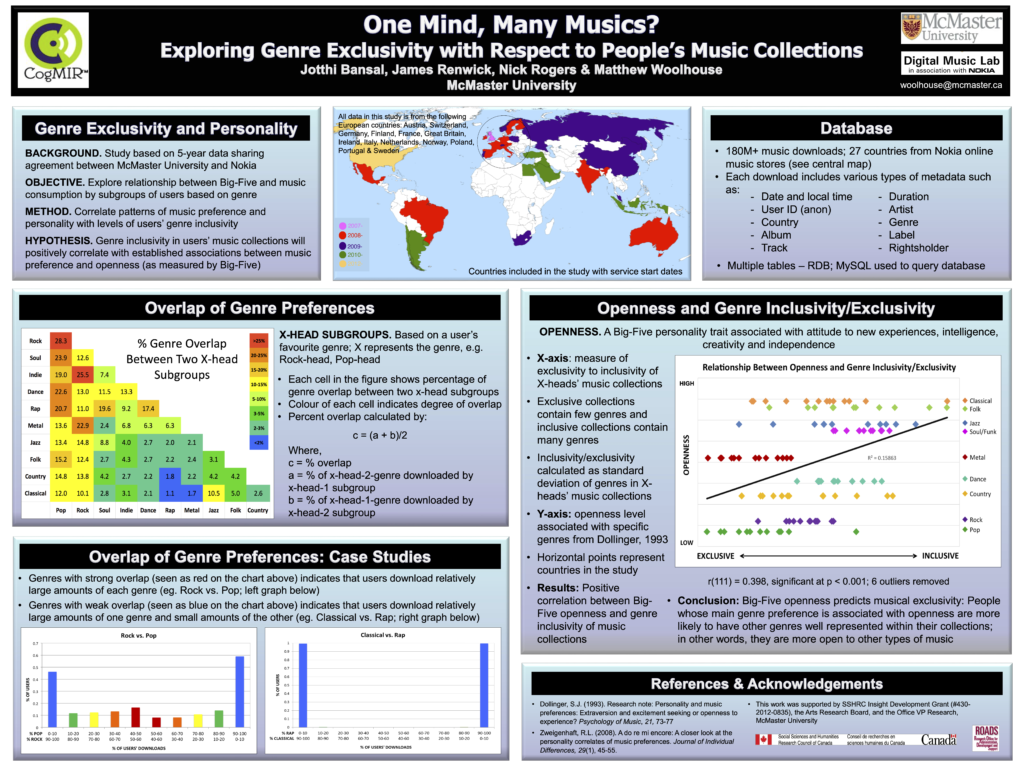

Bansal, J., Renwick, J., Rogers, N. & Woolhouse, M. H. One mind, many musics? Exploring genre exclusivity with respect to people’s music collections. Poster at Cognitively Based Music Informatics Research Seminar (CogMIR). Ryerson University, Canada, 4 October 2014.

Awarded Best Poster #2

Bansal, J., Renwick, J., Rogers, N. & Woolhouse, M. H. Exploring the exclusivity of music genre preference. Poster at 10th NeuroMusic Conference, McMaster University, Canada, 28 September 2014.

Abstract:

This poster examines the link between user consumption and genre preference. Musical genre is typically derived from musical characteristics such as technique, instrumentation and style. Technological advances have allowed listeners to consume music in exciting new ways (for example via their cellular phones). We present compelling graphical evidence and novel analysis of user genre consumption data that highlights the truly multi-dimensional nature of musical preference. Specifically, the poster will outline how we have used data provided by a five-year data-sharing agreement between McMaster University and the Nokia Corporation to explore the following questions: (1) To what extent do consumers of different genres represent distinct populations, and (2) if distinct populations do exist, do the members of different populations exhibit different behaviours with respect to the acquisition of music? Recent studies support the idea that personality traits such as extraversion, creativity, and open-mindedness are correlated with musical preference, which implies that users will form distinct groups based on their primary genre preference (North and Hargreaves, 2008; North, Desborough, and Skarstein, 2005). To investigate this assertion, we use a database containing over 180 million downloads from more than 10 million users to group music listeners by their favourite (most downloaded) style of music. In turn, we explore how these populations have distinct download behaviours.

North, A., & Hargreaves, D. (2008). The social and applied psychology of music. OUP Oxford.

North, A. C., Desborough, L., & Skarstein, L. (2005). Musical preference, deviance, and attitudes towards music celebrities. Personality and Individual Differences, 38(8), 1903-1914.

Poster:

Citations:

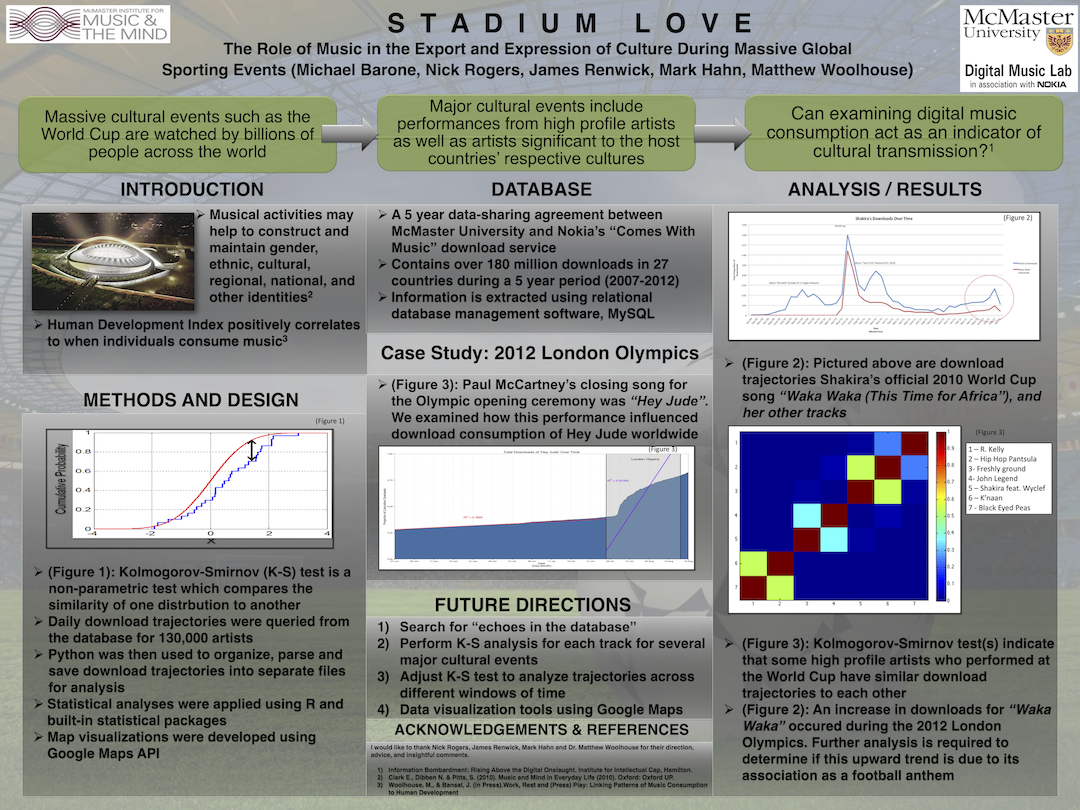

Barone, M. D., Rogers, N., Renwick, J., Hahn, M. & Woolhouse, M. H. Stadium love: The role of music in the exportation and expression of culture during massive global sporting events. Poster at Cognitively Based Music Informatics Research Seminar (CogMIR). Ryerson University, Canada, 4 October 2014.